Last week I was at the SOA Symposium in the World Trade Center in Rotterdam. The theme of this year's edition was "Next Generation SOA". Beforehand I wondered what they meant by it. Apparently I had missed the blog post by Anne Thomas Manes stating that SOA is dead.

It reminded me of my own blog post on the subject. In that post I opposed the statement by SOA experts in a Dutch ICT weekly that SOA was dead in favour of EDA. Because I could not remember exactly which article I had reacted to back then, I was a little nervous about getting in touch with her. But Anne declared SOA dead because of the hype that vendors, customers and media had built up around the acronym. The acronym became so loaded with assumptions that it no longer stood for what it promised. Many SOA projects fail because customers think they can buy a vendor's magic box that solves every problem, or the magic hat of Presto, the Pixar magician. And of course vendors would love to sell that to them. If they could... Declaring SOA dead as a hype, with all its false promises, and focusing on Service Orientation instead: I second that completely. You can't buy or sell SOA, neither as a "silver bullet" nor as a "magic hat". It reminded me of a company that sold an integration product on a blade server: put it in a rack and your ERPs are integrated. Yeah, right. I assume the actual implementation is less magical.

Thomas Erl and Anne had a session to cast out the "Evil SOA" and welcome the "Good SOA". I liked the Mike Oldfield music, but after a while it became too oppressive: it kept looping through the very first part of Tubular Bells, so much that it almost cast me out. But it did get me Thomas Erl's nice SOA Patterns book.

I agree with Thomas and Anne that Service Orientation is very important and will grow in importance, but together with EDA. Well, actually, I don't think EDA is an architecture in itself. As with SOA and cloud: if we hype this idea, we will make the same mistakes again. But as stated in my earlier post, I think that events and services are (or should be) two tightly coupled ideas. No loose coupling here. You use these ideas to loosely couple your functionality. Services are useless without events, and events (whether coming through an ESB or as a stream) are quite useless without services that process them.

For the rest it was a good event for networking. I met a few former colleagues and business partners, and people with whom I had mutual acquaintances. I saw a nice presentation by Sander Hoogendoorn and Twan van der Broek on agile SOA projects combined with ERP. I'd like to learn more about that.

Thursday, 29 October 2009

Tuesday, 27 October 2009

VirtualBox: a virtual competitor of VMware?

I've been a frequent VMware user for years. Most of my setups are done in a virtual machine. It's very convenient: after a new host installation, just installing VMware and restoring the virtual machines gets you up and running.

One of the names that keeps coming up in virtualization on Linux is VirtualBox. I did not pay any attention to it since I was quite happy with VMware. I use VMware Server, since it's free and has just about every feature that VMware Workstation had in the last version I used. That was Workstation 5.5, and the only thing Server lacked was Shared Folders.

But products evolve, and Server has become quite big. Going from 1.x to 2.0 was quite a step, since the footprint increased about fivefold: from about 100MB to about 500MB.

Last week my colleague Erik asked me if I knew VirtualBox. I said I did, at least by name. A colleague on his project had advised him to go for VirtualBox. So I thought I might give it a try.

Some of the features of VirtualBox that interested me were:

- It's about 40MB! That is very small for such a complete product. I like that. I'm fond of small but feature-rich products, like Total Commander, Xtrans and IrfanView on Windows. Apparently there are still programmers who go for smart and compact products.

- I found articles on the internet claiming it is faster than VMware. I also found statements suggesting it is slower. But on a modest laptop, performance would be the deciding feature.

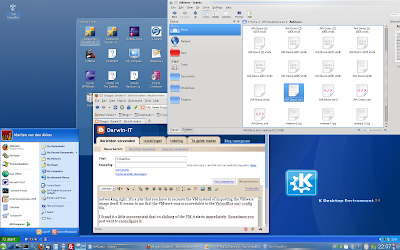

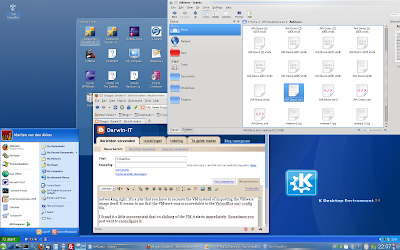

- Seamless mode: VirtualBox can run the guest's applications in separate windows alongside the native applications on your host. It layers the guest taskbar on top of the taskbar of your host. Looks neat!

- Shared Folders: this was the differentiating feature between Server and Workstation. I worked around its absence by installing a FileZilla Server on Windows hosts, or by using SFTP over SSH on Linux. But it is handy to have that feature.

- It's a client/standalone app: this may seem like a nonsense feature. But VMware Server comes with an Apache Tomcat application server that serves the infrastructure web application. That's nice for a server-based application, but on a laptop it's rather overdone.

- 3D acceleration: this summer I wanted to run a Windows racing game under VMware. It didn't work. I should try it under VirtualBox with 3D acceleration switched on.

- But the feature that matters most to me is that it should be able to run or import VMware images. It turns out that it cannot: you have to create a new VirtualBox VM. Luckily you can base it on the virtual disks of a VMware image. Below I explain how.

Pre-requisites:

- Install VirtualBox: download it from http://www.virtualbox.org/wiki/Downloads. Note that there is an Open Source and a Closed Source version. Both are free, but the Closed Source version is the most feature-rich; importing and exporting is only available in the Closed Source version. The Open Source version is apparently available in many Linux repositories, but I went for the Closed Source one.

- Uninstall VMware Tools from the guest OS before migrating. VirtualBox installs its own guest tools with its own drivers. For Windows you can do this from Add or Remove Programs in the Control Panel. Under Linux (RPM based) you can remove it with rpm -e followed by the installed package name (VMwareTools-5.0.0-…). To query the precise version, use rpm -q VMwareTools.

Then you can create a new virtual machine in VirtualBox. You can find a how-to with screenshots here.

For the hard disks, use the existing disks of your VMware image. If the VM is a Windows guest, the disks are probably IDE disks. You could try to use SATA as an additional controller (check the checkbox) and attach the disks to the SATA channels in the order of the disks. Mark the first one as bootable. If it is a Linux guest, the disks are probably SCSI (LSI Logic), so choose SCSI as the additional controller.
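To find out which disk files a VMware VM uses, and on which controller type, you can look in its .vmx file. Below is a minimal sketch of a hypothetical helper (VmxDisks is not part of VMware or VirtualBox). It assumes the usual .vmx lines of the form scsi0:0.fileName = "disk.vmdk" or ide0:0.fileName = "disk.vmdk", and scsi0.virtualDev = "lsilogic" for the SCSI adapter type. It only reads the text; it does not convert anything.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical helper: lists the .vmdk disks referenced by a VMware .vmx file. */
public class VmxDisks {

    // e.g. scsi0:0.fileName = "system.vmdk" or ide0:1.fileName = "data.vmdk"; non-.vmdk entries (CD-ROM) are skipped
    private static final Pattern DISK = Pattern.compile(
            "^\\s*((?:ide|scsi)\\d+:\\d+)\\.fileName\\s*=\\s*\"([^\"]+\\.vmdk)\"\\s*$",
            Pattern.CASE_INSENSITIVE);

    /** Maps controller slot (e.g. "scsi0:0") to disk file name, in the order of the .vmx file. */
    public static Map<String, String> disks(String vmxContent) {
        Map<String, String> result = new LinkedHashMap<>();
        for (String line : vmxContent.split("\\R")) {
            Matcher m = DISK.matcher(line);
            if (m.matches()) {
                result.put(m.group(1).toLowerCase(), m.group(2));
            }
        }
        return result;
    }

    /** Returns the value of e.g. scsi0.virtualDev ("lsilogic"), or null when the key is absent. */
    public static String virtualDev(String vmxContent, String controller) {
        Pattern p = Pattern.compile(
                "^\\s*" + Pattern.quote(controller) + "\\.virtualDev\\s*=\\s*\"([^\"]*)\"\\s*$",
                Pattern.CASE_INSENSITIVE);
        for (String line : vmxContent.split("\\R")) {
            Matcher m = p.matcher(line);
            if (m.matches()) {
                return m.group(1);
            }
        }
        return null;
    }

    public static void main(String[] args) {
        String vmx = String.join("\n",
                "scsi0.present = \"TRUE\"",
                "scsi0.virtualDev = \"lsilogic\"",
                "scsi0:0.fileName = \"system.vmdk\"",
                "scsi0:1.fileName = \"data.vmdk\"",
                "ide1:0.fileName = \"/dev/cdrom\"");
        System.out.println(disks(vmx));               // {scsi0:0=system.vmdk, scsi0:1=data.vmdk}
        System.out.println(virtualDev(vmx, "scsi0")); // lsilogic
    }
}
```

In practice you would pass in the text of the actual .vmx file. The map keeps the file order, which gives you the slot order in which to attach the disks in VirtualBox.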

After adding the disks and other hardware, you can start the VM. Once it is running, install the Guest Additions, which provide the appropriate drivers. Under Windows an installer starts automatically. Under Linux a virtual CD-ROM is mounted: do a 'su -' to root and start the appropriate '.run' file, or use sudo:

```
sudo /media/cdrom/VBoxLinuxAdditions-x86.run
```

In this case it is for a 32-bit Linux guest. For 64-bit Linux guests it should be the amd64 variant. After the install you should restart the guest.

Then you can reconfigure your display type (resolution, color-depth) and the network adapters.

Experiences

Well, my first experiences are pretty positive. It is sometimes a struggle to get the display and the networking right. It's a pity that you have to recreate the VM instead of importing the VMware image itself. It seems to me that the VMware .vmx file could be converted to the VirtualBox XML config file.

I found it a little inconvenient that clicking the VM starts it immediately. Sometimes you just want to reconfigure it.

I still have to evaluate the performance, so I don't know yet whether VirtualBox has the advantage there. But the Seamless mode is really neat.

Labels: Virtual Box, Virtual Machines, VMware

Thursday, 8 October 2009

JNDI in your Applications

Lately I had to build several services to be used from an application server, as a web service or as a service called from BPEL. I find it convenient to first build the application or service as a Java application and test it from JDeveloper and/or using an Ant script. I know it's possible to test an application from within the application server (though I've never done it myself), but it is surely more convenient to test it from an ordinary Java application. It also enables you to use the service in other topologies. In fact, in my opinion a web service, for example, should be no more than an interface: just another exposure of an existing service or application.

If your service needs a database, then from a standalone application you would just create a connection using a driver, a JDBC connect string and a username/password, probably read from a property file. Within an application server, however, you would use JNDI to get a DataSource and retrieve a Connection from it.

But the methods that your services call within the application server should get a connection in a uniform manner. After all, how would a method determine whether it is called from a standalone application or from an application server? The preferred approach, as I see it, is to make the application agnostic of this: retrieving a database connection should be the same in both cases. So how do you achieve this?

The solution I found is to have the methods always retrieve the database connection from a JNDI Context. To enable this, you have to make sure a DataSource is registered up front, before the service-implementing methods are called.

JNDI Context

To understand JNDI, you could take a look at the JNDI Tutorial.

It all starts with retrieving a JNDI Context. From a context you can retrieve any type of object that is registered with it. So it need not be a database connection or DataSource, although I believe that is one of the most obvious uses of JNDI.

To retrieve a JNDI Context I created a helper class:

```java
package nl.darwin_it.crmi.jndi; // "darwin-it" is not a legal package name: hyphens are not allowed

import javax.naming.Context;
import javax.naming.InitialContext;
import javax.naming.NamingException;

import nl.darwin_it.crmi.CrmiBase;

import oracle.jdbc.pool.OracleDataSource;

/**
 * Class providing and initializing JndiContext.
 *
 * @author Martien van den Akker
 * @author Darwin IT Professionals
 */
public class JndiContextProvider extends CrmiBase {

    // Defaults for a Hashtable-based InitialContext; not used by getInitialContext() below.
    public static final String DFT_CONTEXT_FACTORY = "com.sun.jndi.fscontext.RefFSContextFactory";
    public static final String DFT = "Default";

    private Context jndiContext = null;

    public JndiContextProvider() {
    }

    /**
     * Get an InitialContext using the no-argument constructor. Within an application server the
     * context is provided by the server; standalone, the context factory is read from the
     * java.naming.factory.initial property in jndi.properties on the classpath.
     *
     * @return the initial context
     * @throws NamingException
     */
    public Context getInitialContext() throws NamingException {
        final String methodName = "getInitialContext";
        debug("Start " + methodName);
        Context ctx = new InitialContext();
        debug("End " + methodName);
        return ctx;
    }

    /**
     * Bind the Oracle DataSource to the JNDI name.
     *
     * @param ods
     * @param jndiName
     * @throws NamingException
     */
    public void bindOdsWithJNDI(OracleDataSource ods, String jndiName) throws NamingException {
        final String methodName = "bindOdsWithJNDI";
        debug("Start " + methodName);
        // Registering the data source with JNDI
        Context ctx = getJndiContext();
        ctx.bind(jndiName, ods);
        debug("End " + methodName);
    }

    /**
     * Unbind jndiName.
     *
     * @param jndiName
     * @throws NamingException
     */
    public void unbindJNDIName(String jndiName) throws NamingException {
        final String methodName = "unbindJNDIName";
        debug("Start " + methodName);
        // Unregistering the data source from JNDI
        Context ctx = getJndiContext();
        ctx.unbind(jndiName);
        debug("End " + methodName);
    }

    /** Set jndiContext. */
    public void setJndiContext(Context jndiContext) {
        this.jndiContext = jndiContext;
    }

    /** Get jndiContext, creating and caching the InitialContext on first use. */
    public Context getJndiContext() throws NamingException {
        if (jndiContext == null) {
            setJndiContext(getInitialContext());
        }
        return jndiContext;
    }
}
```

This class provides a JNDI Context from which you can retrieve a DataSource, using JndiContextProvider.getJndiContext().

This method retrieves an InitialContext that is cached in a private variable.

From within the application server the InitialContext is provided by the Application Server.

When running as a standalone application, however, you get a default InitialContext without any content and without a service provider that can deliver objects: any lookup or bind fails with a NoInitialContextException. You could get a usable InitialContext by passing the InitialContext constructor a Hashtable with the java.naming.factory.initial property set to com.sun.jndi.fscontext.RefFSContextFactory.

The problem with the Hashtable option is that obtaining the InitialContext then differs from how it is done within the application server. Fortunately, if you don't provide the Hashtable, the constructor looks for a jndi.properties file on the classpath. This file can contain several properties, but for our situation only the following is needed:

```properties
java.naming.factory.initial=com.sun.jndi.fscontext.RefFSContextFactory
```

This context factory is one of the JNDI service providers that Sun offers alongside the standard JNDI implementation, as a separate download. It is a simple file system context factory: a JNDI provider backed by the file system.
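The fscontext provider is a separate jar, so to illustrate the idea on a bare JDK the sketch below swaps in a hypothetical in-memory provider (InMemoryJndi and its Factory class are illustrations, not part of any library). Setting the java.naming.factory.initial system property plays the role of the jndi.properties entry. The point is that both the "main" code and the service code use the plain no-argument new InitialContext(), exactly as they would inside an application server.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.Proxy;
import java.util.Hashtable;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import javax.naming.Context;
import javax.naming.InitialContext;
import javax.naming.NameAlreadyBoundException;
import javax.naming.NameNotFoundException;
import javax.naming.NamingException;
import javax.naming.OperationNotSupportedException;
import javax.naming.spi.InitialContextFactory;

public class InMemoryJndi {

    /** Hypothetical stand-in for RefFSContextFactory: one shared in-memory context per JVM. */
    public static class Factory implements InitialContextFactory {
        private static final Context SHARED = newContext();

        @Override
        public Context getInitialContext(Hashtable<?, ?> environment) {
            return SHARED;
        }
    }

    /** Builds a Context proxy that supports only bind, rebind, lookup, unbind and close. */
    static Context newContext() {
        Map<String, Object> bindings = new ConcurrentHashMap<>();
        InvocationHandler handler = (proxy, method, args) -> {
            String name = (args != null && args.length > 0) ? String.valueOf(args[0]) : null;
            switch (method.getName()) {
                case "bind":
                    if (bindings.putIfAbsent(name, args[1]) != null) {
                        throw new NameAlreadyBoundException(name);
                    }
                    return null;
                case "rebind":
                    bindings.put(name, args[1]);
                    return null;
                case "lookup": {
                    Object bound = bindings.get(name);
                    if (bound == null) {
                        throw new NameNotFoundException(name);
                    }
                    return bound;
                }
                case "unbind":
                    bindings.remove(name);
                    return null;
                case "close":
                    return null;
                case "getEnvironment":
                    return new Hashtable<String, Object>();
                case "hashCode":
                    return System.identityHashCode(proxy);
                case "equals":
                    return proxy == args[0];
                case "toString":
                    return "in-memory context " + bindings.keySet();
                default:
                    throw new OperationNotSupportedException(method.getName());
            }
        };
        return (Context) Proxy.newProxyInstance(
                InMemoryJndi.class.getClassLoader(), new Class<?>[] {Context.class}, handler);
    }

    public static void main(String[] args) throws NamingException {
        // Plays the role of the jndi.properties entry java.naming.factory.initial=...
        System.setProperty(Context.INITIAL_CONTEXT_FACTORY, Factory.class.getName());

        // Standalone "main": register an object under a JNDI name up front.
        new InitialContext().bind("jdbc/DemoDS", "a DataSource would go here");

        // Service code: the same no-argument InitialContext it would use inside a server.
        Object found = new InitialContext().lookup("jdbc/DemoDS");
        System.out.println(found);
    }
}
```

Because the factory is chosen through configuration, the service code contains nothing that tells it whether it runs standalone or inside a server.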

Now the only thing you need to do is bind a DataSource to the context right before you call the service method, for example in the main method of your application.

You should create that DataSource using the properties from the configuration file.
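As a JDK-only illustration of building a DataSource from configuration properties, here is a minimal sketch. PropertiesDataSource and the property keys db.url, db.user and db.password are hypothetical; the class simply hands out DriverManager connections without pooling, and it stands in for OracleDataSource so the example compiles without the Oracle JDBC jar.

```java
import java.io.IOException;
import java.io.PrintWriter;
import java.io.StringReader;
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.SQLException;
import java.sql.SQLFeatureNotSupportedException;
import java.util.Properties;
import java.util.logging.Logger;
import javax.sql.DataSource;

/** Hypothetical DataSource that hands out DriverManager connections (no pooling). */
public class PropertiesDataSource implements DataSource {

    private final String url;
    private final String user;
    private final String password;
    private PrintWriter logWriter;
    private int loginTimeout;

    public PropertiesDataSource(String url, String user, String password) {
        this.url = url;
        this.user = user;
        this.password = password;
    }

    /** Builds the DataSource from the hypothetical keys db.url, db.user and db.password. */
    public static PropertiesDataSource fromProperties(Properties props) {
        String url = props.getProperty("db.url");
        if (url == null) {
            throw new IllegalArgumentException("Property db.url is missing");
        }
        return new PropertiesDataSource(url, props.getProperty("db.user"), props.getProperty("db.password"));
    }

    public String getUrl() {
        return url;
    }

    @Override
    public Connection getConnection() throws SQLException {
        return DriverManager.getConnection(url, user, password);
    }

    @Override
    public Connection getConnection(String username, String pwd) throws SQLException {
        return DriverManager.getConnection(url, username, pwd);
    }

    @Override public PrintWriter getLogWriter() { return logWriter; }
    @Override public void setLogWriter(PrintWriter out) { this.logWriter = out; }
    // Stored only; this sketch does not pass the timeout on to DriverManager.
    @Override public void setLoginTimeout(int seconds) { this.loginTimeout = seconds; }
    @Override public int getLoginTimeout() { return loginTimeout; }

    @Override
    public Logger getParentLogger() throws SQLFeatureNotSupportedException {
        throw new SQLFeatureNotSupportedException("java.util.logging is not used");
    }

    @Override
    public <T> T unwrap(Class<T> iface) throws SQLException {
        if (iface.isInstance(this)) {
            return iface.cast(this);
        }
        throw new SQLException("Not a wrapper for " + iface.getName());
    }

    @Override
    public boolean isWrapperFor(Class<?> iface) {
        return iface.isInstance(this);
    }

    public static void main(String[] args) throws IOException {
        Properties props = new Properties();
        // In practice you would load your configuration file here instead of a literal string.
        props.load(new StringReader(
                "db.url=jdbc:oracle:thin:@dbhost:1521:ORCL\ndb.user=app_user\ndb.password=secret\n"));
        PropertiesDataSource ds = fromProperties(props);
        System.out.println("DataSource for " + ds.getUrl());
    }
}
```

The host, SID and credentials in main are placeholders. The resulting object can be bound under a JNDI name just like an OracleDataSource.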

To create a datasource I created another helper class: JDBCConnectionProvider:

```java
package nl.darwin_it.crmi.jdbc; // hyphens are not allowed in Java package names

import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.SQLException;

import javax.naming.Context;
import javax.naming.NamingException;
import javax.sql.DataSource;

import oracle.jdbc.pool.OracleDataSource;

/**
 * Class providing JdbcConnections
 *
 * @author Martien van den Akker
 * @author Darwin IT Professionals
 */
public abstract class JDBCConnectionProvider {

    public static final String ORCL_NET_TNS_ADMIN = "oracle.net.tns_admin";

    /**
     * Create a database Connection
     *
     * @param driver
     * @param connectString
     * @param userName
     * @param password
     * @return
     * @throws ClassNotFoundException
     * @throws SQLException
     */
    public static Connection createConnection(String driver, String connectString, String userName,
                                              String password) throws ClassNotFoundException, SQLException {
        // Loading the driver class registers it with the DriverManager
        Class.forName(driver);
        // connectString for the Oracle thin driver: jdbc:oracle:thin:@machineName:port:SID
        return DriverManager.getConnection(connectString, userName, password);
    }

    /**
     * Create a database Connection after setting the TNS_ADMIN property.
     *
     * @param driver
     * @param tnsAdmin directory containing tnsnames.ora
     * @param connectString
     * @param userName
     * @param password
     * @return
     * @throws ClassNotFoundException
     * @throws SQLException
     */
    public static Connection createConnection(String driver, String tnsAdmin, String connectString, String userName,
                                              String password) throws ClassNotFoundException, SQLException {
        if (tnsAdmin != null) {
            System.setProperty(ORCL_NET_TNS_ADMIN, tnsAdmin);
        }
        return createConnection(driver, connectString, userName, password);
    }

    /**
     * Create an Oracle DataSource
     *
     * @param driverType
     * @param connectString
     * @param dbUser
     * @param dbPassword
     * @return
     * @throws SQLException
     */
    public static OracleDataSource createDataSource(String driverType, String connectString, String dbUser,
                                                    String dbPassword) throws SQLException {
        OracleDataSource ods = new OracleDataSource();
        ods.setDriverType(driverType);
        ods.setURL(connectString);
        ods.setUser(dbUser);
        ods.setPassword(dbPassword);
        return ods;
    }

    /**
     * Get a Connection from the DataSource registered under jndiName.
     */
    public static Connection getConnection(Context context, String jndiName) throws NamingException, SQLException {
        // Look up the data source registered in the JNDI tree. Cast to the javax.sql.DataSource
        // interface rather than OracleDataSource: a server (such as BPEL PM 10.1.2) may bind
        // its own DataSource implementation.
        DataSource ds = (DataSource) context.lookup(jndiName);
        return ds.getConnection();
    }
}
```

The method createDataSource creates an OracleDataSource. Today I discovered by trial and error that from a BPEL 10.1.2 server you should look it up as a plain javax.sql.DataSource.
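The underlying point is to depend on the javax.sql.DataSource interface instead of a vendor class: a cast to OracleDataSource fails with a ClassCastException when the server has bound a different implementation class. A minimal sketch of a lookup guard that also gives a clearer error message (requireDataSource is a hypothetical helper name):

```java
import java.lang.reflect.Proxy;
import javax.naming.NamingException;
import javax.sql.DataSource;

public class DataSourceLookup {

    /**
     * Returns the object found in JNDI as a javax.sql.DataSource, whatever its implementation
     * class, or fails with a NamingException naming the class that is actually bound.
     */
    public static DataSource requireDataSource(Object bound, String jndiName) throws NamingException {
        if (bound instanceof DataSource) {
            return (DataSource) bound;
        }
        String actual = (bound == null) ? "null" : bound.getClass().getName();
        throw new NamingException(jndiName + " is bound to " + actual + ", not to a javax.sql.DataSource");
    }

    public static void main(String[] args) throws NamingException {
        // Stand-in for a server-specific DataSource class: a dynamic proxy implementing only the interface.
        Object serverDataSource = Proxy.newProxyInstance(
                DataSourceLookup.class.getClassLoader(),
                new Class<?>[] {DataSource.class},
                (proxy, method, methodArgs) -> {
                    throw new UnsupportedOperationException(method.getName());
                });
        DataSource ds = requireDataSource(serverDataSource, "jdbc/DemoDS");
        System.out.println("Usable through the interface: " + ds.getClass().getName());
        // With a real context: requireDataSource(context.lookup(jndiName), jndiName).getConnection()
    }
}
```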

Then with JndiContextProvider.bindOdsWithJNDI(OracleDataSource ods, String jndiName) you can bind the DataSource with the Filesystem Context Provider.

Having done that, your implementation code can get a database connection using JDBCConnectionProvider.getConnection(Context context, String jndiName).

I haven't discovered how yet, but somewhere (in the file system, I presume) the DataSource binding is stored, so a subsequent bind throws an exception. Since you might want to change the connection in your property file, it is convenient to unbind the DataSource at the end of the main method. The method JndiContextProvider.unbindJNDIName(String jndiName) takes care of that.
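One way to avoid the "already bound" exception, sketched below under the assumption that a binding may survive from an earlier run: register with Context.rebind, which overwrites an existing binding, and unbind in a finally block so the name is released even when the service call fails. withBinding and the in-memory context are hypothetical helpers for illustration; with the fscontext provider you would pass the context returned by JndiContextProvider.getJndiContext() instead.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.Proxy;
import java.util.Hashtable;
import java.util.Map;
import java.util.concurrent.Callable;
import java.util.concurrent.ConcurrentHashMap;
import javax.naming.Context;
import javax.naming.NameAlreadyBoundException;
import javax.naming.NameNotFoundException;
import javax.naming.OperationNotSupportedException;

public class BindingLifecycle {

    /** Binds obj under jndiName, runs body, and always unbinds the name afterwards. */
    public static <T> T withBinding(Context ctx, String jndiName, Object obj, Callable<T> body)
            throws Exception {
        // rebind overwrites a stale binding instead of throwing NameAlreadyBoundException like bind
        ctx.rebind(jndiName, obj);
        try {
            return body.call();
        } finally {
            ctx.unbind(jndiName);
        }
    }

    /** Hypothetical in-memory Context supporting bind, rebind, lookup, unbind and close only. */
    public static Context inMemoryContext() {
        Map<String, Object> bindings = new ConcurrentHashMap<>();
        InvocationHandler handler = (proxy, method, args) -> {
            String name = (args != null && args.length > 0) ? String.valueOf(args[0]) : null;
            switch (method.getName()) {
                case "bind":
                    if (bindings.putIfAbsent(name, args[1]) != null) {
                        throw new NameAlreadyBoundException(name);
                    }
                    return null;
                case "rebind":
                    bindings.put(name, args[1]);
                    return null;
                case "lookup": {
                    Object bound = bindings.get(name);
                    if (bound == null) {
                        throw new NameNotFoundException(name);
                    }
                    return bound;
                }
                case "unbind":
                    bindings.remove(name);
                    return null;
                case "close":
                    return null;
                case "getEnvironment":
                    return new Hashtable<String, Object>();
                case "hashCode":
                    return System.identityHashCode(proxy);
                case "equals":
                    return proxy == args[0];
                case "toString":
                    return "in-memory context " + bindings.keySet();
                default:
                    throw new OperationNotSupportedException(method.getName());
            }
        };
        return (Context) Proxy.newProxyInstance(
                BindingLifecycle.class.getClassLoader(), new Class<?>[] {Context.class}, handler);
    }

    public static void main(String[] args) throws Exception {
        Context ctx = inMemoryContext();
        ctx.bind("jdbc/DemoDS", "stale binding from an earlier run");
        String seen = withBinding(ctx, "jdbc/DemoDS", "fresh DataSource",
                () -> (String) ctx.lookup("jdbc/DemoDS"));
        System.out.println(seen);
        try {
            ctx.lookup("jdbc/DemoDS");
        } catch (NameNotFoundException e) {
            System.out.println("jdbc/DemoDS is unbound again");
        }
    }
}
```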

What also took me quite a long time to figure out is which jar files you need to get this working in a standalone application. I found quite a lot of examples but no list of jar files. I even understood (wrongly, as it turned out) that the base J2SE 5 or 6 would deliver these packages.

The jars you need are:

- jndi.jar

- fscontext.jar

- providerutil.jar
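A quick way to see which of the required classes are actually on the classpath of a standalone run is to try loading them. The sketch below is a hypothetical diagnostic; the classes checked are the JNDI API, the file system provider class from fscontext.jar, and the Oracle DataSource class used earlier. Note that the javax.naming API itself has been part of J2SE since 1.3, while the file system provider is not.

```java
import java.util.LinkedHashMap;
import java.util.Map;

public class JndiClasspathCheck {

    /** Reports, per class name, whether it can be loaded from the current classpath. */
    public static Map<String, Boolean> check(String... classNames) {
        Map<String, Boolean> result = new LinkedHashMap<>();
        for (String name : classNames) {
            try {
                // initialize=false: only check that the class can be found, don't run static initializers
                Class.forName(name, false, JndiClasspathCheck.class.getClassLoader());
                result.put(name, true);
            } catch (ClassNotFoundException | LinkageError e) {
                result.put(name, false);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        Map<String, Boolean> found = check(
                "javax.naming.InitialContext",                // JNDI API
                "com.sun.jndi.fscontext.RefFSContextFactory", // file system provider (fscontext.jar)
                "oracle.jdbc.pool.OracleDataSource");         // Oracle JDBC driver jar
        found.forEach((name, ok) -> System.out.println((ok ? "found   " : "MISSING ") + name));
    }
}
```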

You can find them in the BPEL PM 10.1.2 tree, and probably also in an Oracle 10.1.3.x Application Server or WebLogic 10.3 (or nowadays 11g). The standard Sun versions can be found via

http://java.sun.com/products/jndi/downloads/index.html

There you'll find the following packages:

- jndi-1_2_1.zip

- fscontext-1_2-beta3.zip

Conclusion

This approach gives me a database connection both within the application server and from a standalone application. I still read the JNDI name from a property file. That way it is configurable from which JNDI source the connection has to be fetched (I don't like hardcoding).

In my search I found that from Oracle 11g onwards you can use the Universal Connection Pool library (http://www.oracle.com/technology/pub/articles/vasiliev-oracle-jdbc.html) to have a connection pool in your application.

Labels: Java, JDeveloper, Oracle Application Server

Monday, 21 September 2009

OPN Bootcamps delivered by Darwin-iT

Tomorrow and Wednesday (22nd-23rd of September) I deliver the first OPN Bootcamp on Oracle BPM Suite in De Meern, the Netherlands. The course is a two-day hands-on workshop on BPM Suite Studio, covering the basics of developing business processes.

There are still seats available, so if you would like to join and are in a position to do so, you still can. More info on the course can be found here.

Other bootcamps in October that will be delivered by us in De Meern are:

- Oracle BI Enterprise Edition, on 6-8 October 2009.

- AIA Implementation Boot Camp, on 27-29 October 2009.

The bootcamps are also open to partners from outside the Netherlands.

We're looking forward to meeting you at one of these courses. Dutch prospective participants: check out the training pages on our website regularly. More detailed info on these courses and more dates are expected soon.

Labels: BPM Suite, OPN, Oracle AIA, Oracle BI

Thursday, 17 September 2009

Shortcut to your VM on the desktop

With VMware Server 2.0 you can start and stop VMs using the VMware Infrastructure Web Access application. It replaces the VMware console of VMware Server 1.0. The console is now a control in the Console tab of the virtual machine in Web Access: click in the black area and the console pops up. If you use VMware Server 2.0 or similar VMware apps, you probably already know this.

What I found recently, though, is that you can create a shortcut on your desktop to a particular VM. This is handy because it pops up the console, where you can log in with your VMware administrator account, and then the VM starts. From the console you can also suspend or stop the VM using the Remote/Troubleshoot menu.

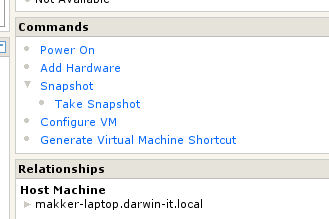

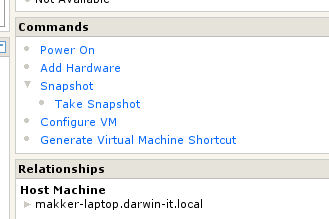

To create a shortcut on your desktop, go to the summary page of your VM. In the Commands portlet you'll find the "Generate Virtual Machine Shortcut" link:

Follow the instructions and you get an icon with the shortcut on your desktop.

Tuesday, 15 September 2009

I can do WSIF me...

Today I struggled with WSIF using the WSIF-chapter in the BPEL Cookbook.

WSIF stands for Web Services Invocation Framework. It is an Apache technology that enables a Java service call to be described in a WSDL (Web Services Description Language) document. The WSDL describes how the request and response documents map to the input and output objects of the Java service call, and how the operation binds to the Java method call.

BPEL Process Manager supports calling several Java technologies using WSIF. The advantage of WSIF is that from the BPEL perspective it works exactly the same as with web services. The functional definition part of the WSDL (the schemas, message definitions and port types) is the same; the differences are in the implementation parts. Instead of using SOAP over HTTP, BPEL PM calls the Java service directly. Performance is then about the same as calling Java from embedded Java. BPEL PM has to be able to call the Java libraries, so they have to be on its classpath.

Although the chapter "Using WSIF for Integration" in the BPEL Cookbook clearly states how to work out a Java callout from BPEL, I still ran into some difficulties.

Generating the XML Facades

The BPEL Cookbook suggests using the schemac utility to compile the schemas in the WSDL into the XML Facade classes. The target directory should be <bpelpm_oracle_home>\integration\orabpel\system\classes. I found this not so handy. First of all, I don't like having a bunch of classes in this folder; it tends to get messy. So I want them in a jar file. But I also found that schemac was not able to compile the classes as such. So I started off by adding two parameters:

You could also use the -jar option to jar the compiled classes into a jar file, which is essentially my goal (but then you should presumably omit the -noCompile option).

Also you could add a -classpath <path> option to denote a classpath.

I found that my major problem was some missing jar files. Although I used schemac from the OraBPEL Developer prompt, my classpath was not set properly, something I did not find in the cookbook. The jar files you need to successfully compile the classes are:

But the XML Facade classes are to be used in a Java implementation class that is called from BPEL PM using WSIF. And since it is one project where the facades serve only the implementing Java class, I want them shipped in the same jar file as my custom code. That way I have to deliver only one jar file to the test and production servers. So I still do not want the schemac utility to compile and jar my XML Facade classes.

To automate this I created an ant file.

Generate the XML Facades using Ant

To be able to use Ant within your project, it is handy to add the Ant technology scope. That way you can let JDeveloper generate an Ant build file based on your project.

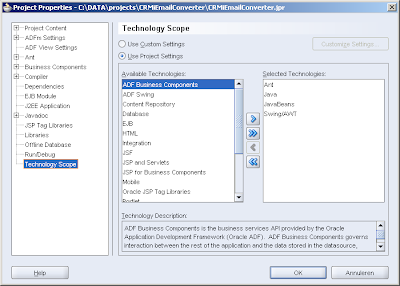

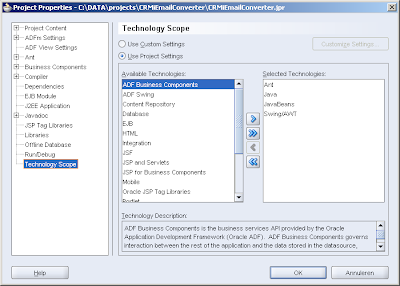

To do so go to the project properties and go to the Technology Scope entry where you can shuttle the Ant scope to the right:

Then it is handy to have a user-library created that contains the OraBPEL libraries needed to compile the XML Facades:

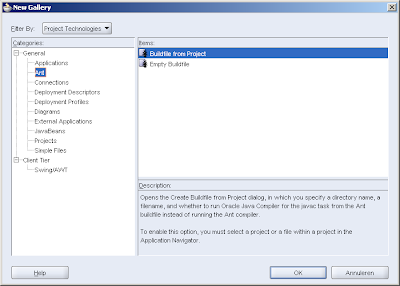

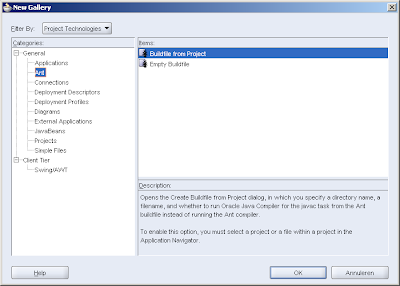

Having done that you can create a build file based on your project that contains amongst others a compile target with the appropriate classpath (including the just created user-libs) set:

Add genFacedes ant targe with the schemac task

To add the genFacades ant target for generating the facades, you have to define the schemac task in the top of your build.xml file:

For this to work you have to extend the build.properties with the bpel.home property:

Mark that the bpel home (for 10.1.2) is in the "integration\orabpel" sub-folder of the oracle-home.

Having done that, my genFacades target looks like:

This target first creates a directory where the classes are generated into (if it does not exist yet) and then delete files that are generated in that directory within an earlier iteration. This is to cleanup classes that became obsolete because of XSD changes.

Then it does the schemac task that is defined earlier in the project.

Compile and Jar the files

You can now implement your java code as suggested in the BPEL Cookbook. After that you want to compile and jar the project files.

Since we use BPEL 10.1.2 at my current customer (I know it's high time to upgrade, the team is busy with that), I found that the classes were not accepted by the BPEL PM. It lead to an unsupported class version error. BPEL 10.1.2 only supports Java SE 1.4 compiled classes. So I had to adapt my compile target:

The create-jar and deploy-jar targets look like:

WSIF stands for Web Services Invocation Framework. It is an Apache technology that lets a Java service call be described in a WSDL (Web Services Description Language) document. The WSDL describes how the request and response documents map to the input and output objects of the Java call, and how each WSDL operation binds to a Java method call.

BPEL Process Manager supports calling several Java technologies through WSIF. The advantage of WSIF is that, from the BPEL perspective, it works exactly the same as calling a web service. The functional part of the WSDL (the schemas, message definitions and port types) is the same; the differences are in the implementation part (the binding and service). Instead of using SOAP over HTTP, BPEL PM calls the Java service directly, so the performance is about the same as calling Java from embedded Java. BPEL PM does have to be able to load the Java libraries, so they must be on its classpath.
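To illustrate why the Java libraries must be on the classpath, here is a toy Java sketch of what a Java binding boils down to conceptually: load a class by name and invoke a method by name through reflection. This is not the WSIF API and not how BPEL PM works internally; `JavaCalloutSketch`, `DemoService` and `normalize` are hypothetical names.

```java
import java.lang.reflect.Method;
import java.util.Locale;

// Toy illustration of a "Java binding": load a class by name and call a
// method by name. NOT the WSIF API; all names here are hypothetical.
class JavaCalloutSketch {

    // Hypothetical service class standing in for a custom Java implementation.
    public static class DemoService {
        public String normalize(String input) {
            return input.trim().toLowerCase(Locale.ROOT);
        }
    }

    // Returns true if the class can be loaded by the current class loader.
    static boolean isOnClasspath(String className) {
        try {
            Class.forName(className);
            return true;
        } catch (ClassNotFoundException e) {
            return false;
        }
    }

    // Loads className, creates an instance with its no-arg constructor and
    // invokes the public single-argument method methodName with arg.
    static Object invoke(String className, String methodName, Object arg) {
        try {
            Class<?> cls = Class.forName(className);
            Object target = cls.getDeclaredConstructor().newInstance();
            Method method = cls.getMethod(methodName, arg.getClass());
            return method.invoke(target, arg);
        } catch (ClassNotFoundException e) {
            throw new IllegalStateException("Not on the classpath: " + className, e);
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("Cannot invoke " + methodName, e);
        }
    }

    public static void main(String[] args) {
        String service = DemoService.class.getName();
        System.out.println(invoke(service, "normalize", "  Foo@Example.COM "));
        System.out.println(isOnClasspath("nl.example.NotDeployed"));
    }
}
```

If the jar with the implementation class is missing from the classpath, the very first step (`Class.forName`) is where things fail, which is why the jar eventually has to be deployed to a directory that BPEL PM loads classes from.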

Although the chapter "Using WSIF for Integration" in the BPEL Cookbook explains quite clearly how to implement a Java callout from BPEL, I still ran into some difficulties.

Generating the XML Facades

The BPEL Cookbook suggests using the schemac utility to compile the schemas in the WSDL into XML Facade classes, with <bpelpm_oracle_home>\integration\orabpel\system\classes as the target directory. I did not find this very handy. First of all, I don't like having a bunch of classes in this folder; it tends to get messy, so I want them in a jar file. I also found that schemac was not able to compile the classes as-is. So I started off by adding two parameters:

    C:\DATA\projects\EmailConverter\wsdl>schemac -sourceOut ..\src -noCompile WS_EmailConverter.wsdl

The -sourceOut parameter makes schemac write the generated source files to the given folder. The -noCompile option tells the utility not to compile the classes.

You could also use the -jar option to have the compiled classes packaged into a jar file, which is essentially my goal (but then you would presumably have to omit the -noCompile option).

You can also add a -classpath <path> option to specify a classpath.

My main problem turned out to be some missing jar files. Although I ran schemac from the OraBPEL Developer prompt, my classpath was not set properly, and this is not mentioned in the cookbook. The jar files you need to successfully compile the classes are:

- orabpel.jar
- orabpel-boot.jar
- orabpel-thirdparty.jar
- xmlparserv2.jar
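Since missing jars were the actual problem, a small check before running schemac can save some head-scratching. The following is a minimal Java sketch, assuming you know which directories hold these jars (the directories in `main` are hypothetical guesses; check your own installation). It reports which of the four jars are missing and builds the argument list for schemac with the -classpath, -sourceOut and -noCompile options described above. `SchemacCommand` and its methods are illustrative names, not part of the OraBPEL tooling.

```java
import java.io.File;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collection;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

// Sketch: locate the jars schemac needs and build a schemac argument list.
// The search directories are assumptions; locations differ per install.
class SchemacCommand {

    // The jar files that are needed to compile the XML Facades.
    static final List<String> REQUIRED_JARS = Arrays.asList(
            "orabpel.jar", "orabpel-boot.jar", "orabpel-thirdparty.jar", "xmlparserv2.jar");

    // Returns the required jar names that do not occur in availableNames.
    static List<String> missingJars(Collection<String> availableNames) {
        List<String> missing = new ArrayList<>();
        for (String jar : REQUIRED_JARS) {
            if (!availableNames.contains(jar)) {
                missing.add(jar);
            }
        }
        return missing;
    }

    // Looks for each required jar in searchDirs (the first directory that has
    // it wins) and returns the absolute paths found, in REQUIRED_JARS order.
    static List<String> findJars(List<File> searchDirs) {
        List<String> paths = new ArrayList<>();
        for (String jar : REQUIRED_JARS) {
            for (File dir : searchDirs) {
                File candidate = new File(dir, jar);
                if (candidate.isFile()) {
                    paths.add(candidate.getAbsolutePath());
                    break;
                }
            }
        }
        return paths;
    }

    // Extracts the bare file names from a list of paths.
    static Set<String> fileNames(List<String> paths) {
        Set<String> names = new LinkedHashSet<>();
        for (String p : paths) {
            names.add(new File(p).getName());
        }
        return names;
    }

    // Arguments for the schemac call from the text, plus a -classpath option.
    static List<String> schemacArgs(List<String> jarPaths, String sourceOut, String wsdl) {
        String classpath = String.join(File.pathSeparator, jarPaths);
        return Arrays.asList("schemac", "-classpath", classpath,
                "-sourceOut", sourceOut, "-noCompile", wsdl);
    }

    public static void main(String[] args) {
        // Hypothetical search directories: adjust to your own installation.
        List<File> dirs = Arrays.asList(
                new File("C:/oracle/bpelpm/integration/orabpel/lib"),
                new File("C:/oracle/bpelpm/lib"));
        List<String> jars = findJars(dirs);
        List<String> missing = missingJars(fileNames(jars));
        if (!missing.isEmpty()) {
            System.out.println("Missing jars: " + missing);
        }
        System.out.println(schemacArgs(jars, "..\\src", "WS_EmailConverter.wsdl"));
    }
}
```

The sketch deliberately stops at building the argument list; you could hand it to `java.lang.ProcessBuilder` if you want to run schemac from Java as well.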

But the XML Facade classes are to be used in a Java implementation class that BPEL PM calls through WSIF. Since it is one project, and the facades are used only by that implementing class, I want them shipped in the same jar file as my custom code. That way I only have to deliver one jar file to the test and production servers. So I still do not want the schemac utility to compile and jar my XML Facade classes.

To automate this, I created an Ant build file.

Generate the XML Facades using Ant

To be able to use Ant within your project, it is handy to add the Ant technology scope. That way you can let JDeveloper generate an Ant build file based on your project.

To do so go to the project properties and go to the Technology Scope entry where you can shuttle the Ant scope to the right:

Then it is handy to have a user-library created that contains the OraBPEL libraries needed to compile the XML Facades:

Having done that, you can create a build file based on your project. Among other things, it contains a compile target with the appropriate classpath set (including the user library you just created).

Add a genFacades Ant target with the schemac task

To add the genFacades Ant target for generating the facades, you first have to define the schemac task at the top of your build.xml file:

    <taskdef name="schemac" classname="com.collaxa.cube.ant.taskdefs.Schemac"
             classpath="${bpel.home}\lib\orabpel-ant.jar"/>

For this to work, you have to extend build.properties with the bpel.home property:

    #Tue Sep 15 11:28:59 CEST 2009
    javac.debug=on
    javac.deprecation=on
    javac.nowarn=off
    oracle.home=c:/oracle/jdeveloper101340
    bpel.home=C:\\oracle\\bpelpm\\integration\\orabpel
    app.server.lib=${bpel.home}\\system\\classes
    build.home=${basedir}/build
    output.dir=${build.home}/classes
    build.classes.home=${build.home}/classes
    build.jar.home=${build.home}/jar
    build.jar.name=${ant.project.name}.jar
    build.jar.mainclass=nl.rabobank.crmi.emailconverter.EmailConverter

(I also added some other properties that I'll use later on.) Note that for 10.1.2 the BPEL home is the "integration\orabpel" subfolder of the BPEL PM Oracle home.
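Ant expands `${...}` references in a properties file, and the properties file format treats a backslash as an escape character, which is why the Windows paths above use doubled backslashes. The following simplified Java sketch (not Ant's actual implementation) shows how `app.server.lib` ends up as a plain Windows path: `java.util.Properties` turns `\\` into `\`, and a small resolver expands references to keys defined in the same file. Built-in Ant properties such as `${basedir}` are left untouched here; `BuildPropertiesSketch` is a hypothetical name.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Simplified sketch of how ${...} references in build.properties resolve.
// Ant's real rules are richer; this only expands references to keys
// defined in the same file and leaves unknown ones (e.g. ${basedir}) as-is.
class BuildPropertiesSketch {

    private static final Pattern REF = Pattern.compile("\\$\\{([^}]+)\\}");

    // Parses properties text; a "\\" in the file becomes a single "\".
    static Properties load(String text) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return props;
    }

    // Expands ${name} references, repeating until nothing changes
    // (bounded, so a cyclic definition cannot loop forever).
    static String resolve(Properties props, String key) {
        String value = props.getProperty(key);
        if (value == null) {
            return null;
        }
        for (int depth = 0; depth < 10; depth++) {
            Matcher m = REF.matcher(value);
            StringBuffer out = new StringBuffer();
            while (m.find()) {
                String ref = props.getProperty(m.group(1));
                String replacement = ref != null ? ref : m.group(0);
                // quoteReplacement: values contain backslashes and '$'
                m.appendReplacement(out, Matcher.quoteReplacement(replacement));
            }
            m.appendTail(out);
            String next = out.toString();
            if (next.equals(value)) {
                break;
            }
            value = next;
        }
        return value;
    }

    public static void main(String[] args) {
        String text = "bpel.home=C:\\\\oracle\\\\bpelpm\\\\integration\\\\orabpel\n"
                + "app.server.lib=${bpel.home}\\\\system\\\\classes\n";
        Properties props = load(text);
        System.out.println(resolve(props, "app.server.lib"));
    }
}
```

Running `main` should print `C:\oracle\bpelpm\integration\orabpel\system\classes`, the directory the deploy target copies the jar into. Forward slashes (as used for oracle.home) avoid the escaping question altogether.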

Having done that, my genFacades target looks like this:

    <target name="genFacades" description="Generate XML Facade sources">
      <echo>First delete the XML Facade sources generated earlier from ${basedir}/src/nl/darwin-it/www/INT</echo>
      <mkdir dir="${basedir}/src/nl/darwin-it/www/INT"/>
      <delete>
        <fileset dir="${basedir}/src/nl/darwin-it/www/INT" includes="**/*"/>
      </delete>
      <echo>Generate XML Facade sources from ${basedir}\wsdl\INTI_WS_EmailConverter.wsdl to ${basedir}/src</echo>
      <schemac input="${basedir}\wsdl\INTI_WS_EmailConverter.wsdl"
               sourceout="${basedir}/src" nocompile="true"/>
    </target>

This target first creates the directory that the sources are generated into (if it does not exist yet) and then deletes the files generated there by an earlier run. This cleans up classes that became obsolete because of XSD changes.

Then it calls the schemac task that is defined at the top of the build file.

Compile and Jar the files

You can now implement your Java code as described in the BPEL Cookbook. After that, you will want to compile and jar the project files.

Since we use BPEL 10.1.2 at my current customer (I know it's high time to upgrade; the team is working on that), I found that BPEL PM did not accept the classes: they led to an UnsupportedClassVersionError. BPEL 10.1.2 only supports classes compiled for Java 1.4, so I had to adapt my compile target:

    <target name="compile" description="Compile Java source files" depends="init">
      <echo>Compile Java source files</echo>
      <javac destdir="${output.dir}" classpathref="classpath"
             debug="${javac.debug}" nowarn="${javac.nowarn}"
             deprecation="${javac.deprecation}" encoding="Cp1252"
             source="1.4" target="1.4">
        <src path="src"/>
      </javac>
    </target>
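To verify the result before deploying, you can inspect a compiled class file: after the 4-byte magic number 0xCAFEBABE, bytes 4 to 7 of the header hold the minor and major class-file version, and `javac -target 1.4` writes major version 48 (Java 5 is 49, Java 6 is 50). A JVM that meets a higher major version than it supports throws `UnsupportedClassVersionError`. Below is a minimal sketch; `ClassVersionCheck` is a hypothetical helper that uses modern Java, so it is meant to run on the build machine, not inside BPEL PM.

```java
import java.io.ByteArrayInputStream;
import java.io.DataInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.nio.file.Files;
import java.nio.file.Paths;

// Sketch: read the class-file major version to confirm that classes were
// compiled with target 1.4 before deploying them to BPEL PM 10.1.2.
class ClassVersionCheck {

    static final int MAGIC = 0xCAFEBABE;
    // Major version written by javac -target 1.4 (Java 5 = 49, Java 6 = 50, ...).
    static final int JAVA_1_4_MAJOR = 48;

    // Reads the header: u4 magic, u2 minor_version, u2 major_version.
    static int majorVersion(InputStream in) throws IOException {
        DataInputStream data = new DataInputStream(in);
        if (data.readInt() != MAGIC) {
            throw new IOException("Not a class file");
        }
        data.readUnsignedShort();        // minor_version (ignored here)
        return data.readUnsignedShort(); // major_version
    }

    // Convenience overload for an in-memory class file.
    static int majorVersion(byte[] classBytes) {
        try {
            return majorVersion(new ByteArrayInputStream(classBytes));
        } catch (IOException e) {
            throw new IllegalArgumentException(e.getMessage(), e);
        }
    }

    // True if the major version is at most 48, the Java 1.4 limit.
    static boolean loadableOnJava14(byte[] classBytes) {
        return majorVersion(classBytes) <= JAVA_1_4_MAJOR;
    }

    public static void main(String[] args) throws IOException {
        for (String path : args) {
            byte[] bytes = Files.readAllBytes(Paths.get(path));
            System.out.println(path + ": major " + majorVersion(bytes)
                    + (loadableOnJava14(bytes) ? " (OK for 1.4)" : " (too new for 1.4)"));
        }
    }
}
```

You would run it against files under build/classes, for example `java ClassVersionCheck path/to/SomeClass.class`.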

Because it is sometimes handy to compile and build within JDeveloper, it is wise to also set the Java source and target versions in the project properties.

The create-jar and deploy-jar targets look like this:

    <!-- create Jar -->
    <target name="create-jar" description="Create Jar file" depends="compile">
      <echo message="Create Jar file"></echo>
      <jar destfile="${build.jar.home}/${build.jar.name}" basedir="${output.dir}">
        <manifest>
          <attribute name="Main-Class" value="${build.jar.mainclass}"/>
        </manifest>
      </jar>
    </target>

    <!-- deploy Jar -->
    <target name="deploy-jar" description="deploy jar file" depends="create-jar">
      <echo message="Deploy Jar file to ${app.server.lib}"></echo>
      <copy todir="${app.server.lib}" file="${build.jar.home}/${build.jar.name}" overwrite="true" />
    </target>
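Because the point of this setup is a single jar that contains both the custom code and the generated XML Facade classes, it can be worth checking the jar that create-jar produces before deploy-jar copies it. Here is a minimal sketch using `java.util.jar`; `JarInspector`, `Report` and the package paths are hypothetical, and it assumes a modern JDK on the build machine.

```java
import java.io.FileInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.List;
import java.util.jar.Attributes;
import java.util.jar.JarEntry;
import java.util.jar.JarInputStream;
import java.util.jar.Manifest;

// Sketch: inspect the jar produced by create-jar before deploy-jar copies it.
class JarInspector {

    // Main-Class from the manifest (null if absent) plus all class entries.
    static final class Report {
        final String mainClass;
        final List<String> classEntries;

        Report(String mainClass, List<String> classEntries) {
            this.mainClass = mainClass;
            this.classEntries = classEntries;
        }

        // True if at least one class lives under packagePath, e.g. "nl/example/facade".
        boolean hasPackage(String packagePath) {
            String prefix = packagePath.endsWith("/") ? packagePath : packagePath + "/";
            for (String name : classEntries) {
                if (name.startsWith(prefix)) {
                    return true;
                }
            }
            return false;
        }
    }

    // Reads the manifest and collects the names of all .class entries.
    static Report inspect(InputStream in) {
        try (JarInputStream jar = new JarInputStream(in)) {
            Manifest manifest = jar.getManifest();
            String main = manifest == null ? null
                    : manifest.getMainAttributes().getValue(Attributes.Name.MAIN_CLASS);
            List<String> classes = new ArrayList<>();
            JarEntry entry;
            while ((entry = jar.getNextJarEntry()) != null) {
                if (!entry.isDirectory() && entry.getName().endsWith(".class")) {
                    classes.add(entry.getName());
                }
            }
            return new Report(main, classes);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) throws IOException {
        try (InputStream in = new FileInputStream(args[0])) {
            Report report = inspect(in);
            System.out.println("Main-Class: " + report.mainClass);
            System.out.println("Classes: " + report.classEntries.size());
            if (args.length > 1) {
                System.out.println(args[1] + " present: " + report.hasPackage(args[1]));
            }
        }
    }
}
```

For example, `java JarInspector build/jar/<project>.jar <facade/package/path>` prints the Main-Class, the number of classes, and whether the facade package made it into the jar.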

So this works: together with the BPEL Cookbook, it resulted in a working integration. And since it is a direct coupling, it is pretty fast (although my implementation does not do much yet...).

How new is new with Oracle-Sun?

Yesterday I received the announcement of an innovative new product from Oracle and Sun: "Announcing the World’s First OLTP Database Machine with Sun FlashFire Technology".

Immediately I thought: how new is that? Where did I hear that before? Oh yeah: last year around OpenWorld, the database machine with HP. I had already found Oracle's announced acquisition of Sun remarkable in light of that database machine. Apparently what is new is the Sun FlashFire technology, and the fact that they now specifically talk about OLTP (I can't remember whether they explicitly positioned the Oracle-HP Database Machine that way).

Later in the day I also found a statement that Oracle is fastest on Sun hardware, meant to show that Oracle is serious about embracing Sun technology. "Oracle and Sun together are hard to match," the announcement states. Oracle is going to prove with benchmarks that the world is better off with the Oracle-Sun marriage.

