The toolstack we started with was the following:

- development environment:
  - Designer 6i
  - Forms/Reports 6i
  - Database 10gR2
  - Windows Client/Server Runtime environment
  - Headstart Utilities
  - Several of our own scripts/utilities based on the repository API
- deployment environment:
  - Oracle Forms/Reports 10gR2
  - Oracle Database 10gR2
  - Oracle Application Server 10gR2
  - Repository Object Browser

The goal was to get rid of Designer 6i, as its support stops at the end of this year.

The fact that the deployment environment was already Forms 10gR2 made life much easier. When preparing a new release, the forms (and reports) are already converted to the 10gR2 toolstack.

First I installed Oracle Developer Suite 10gR2 (client software) with some additional patches.

Then I created a new database which was a copy of the development database.

In this database I simply pressed [Upgrade] in the Repository Administration Utility to do the actual migration.

In less than an hour the actual migration was finished!

After this I tested all related software and everything worked fine.

Compared to the migration from Oracle Designer 1.3.2 to Oracle Designer 6i, this migration went extremely quickly and smoothly.

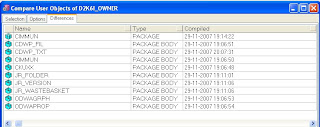

I became a little suspicious, so I did some research to discover the differences between the two Oracle Designer versions. I compared the two database schemas and found only some minor changes (10 changed objects).
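A comparison like this can be sketched in a few lines of Python. This is only an illustration and not the method actually used here: it assumes you have exported an inventory of each schema (for example from a data dictionary view such as `ALL_OBJECTS`) into a mapping from object type and name to some fingerprint of the definition. The `diff_schemas` function and the sample object names are hypothetical.

```python
from typing import Dict, List, Tuple

# Key: (object_type, object_name); value: a fingerprint of the definition,
# e.g. a hash of the DDL text. How the inventory is exported is left open.
Inventory = Dict[Tuple[str, str], str]


def diff_schemas(old: Inventory, new: Inventory) -> Dict[str, List[Tuple[str, str]]]:
    """Return the objects that were added, removed, or changed between two schemas."""
    old_keys, new_keys = set(old), set(new)
    return {
        "added": sorted(new_keys - old_keys),
        "removed": sorted(old_keys - new_keys),
        # Present in both schemas, but with a different fingerprint.
        "changed": sorted(k for k in old_keys & new_keys if old[k] != new[k]),
    }


if __name__ == "__main__":
    # Hypothetical sample data, not taken from a real Designer repository.
    schema_6i = {
        ("TABLE", "DEMO_ELEMENTS"): "a1",
        ("PACKAGE", "DEMO_API"): "b1",
        ("VIEW", "DEMO_V"): "c1",
    }
    schema_10g = {
        ("TABLE", "DEMO_ELEMENTS"): "a1",
        ("PACKAGE", "DEMO_API"): "b2",
        ("INDEX", "DEMO_I"): "d1",
    }
    for kind, objects in diff_schemas(schema_6i, schema_10g).items():
        print(kind, objects)
```

Counting the entries under `changed` gives a figure comparable to the "10 changed objects" mentioned above, provided the fingerprints really reflect the object definitions.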

After this I compared the client software. On this side there were also few changes. They even kept the same old directory names (cgenf61, repos6i, etc.)!

Next I searched Metalink for a document describing the changes between the two versions. I did not find anything!

The main difference, or at least the one I noticed, is that Oracle Forms 10g is generated instead of Oracle Forms 6i.

I have come to the conclusion that Oracle Designer 6i was the last version of Oracle Designer with really new functionality, and that all further releases are simple upgrades. It really is time to say goodbye to the tool that has been my friend for a very long time...