Tuesday, 22 December 2009

JDeveloper 11g and SoaSuite 11g: a tight combination

This week I started preparing the OPN Bootcamp on SoaSuite 11g that I'm to give in January (from the 12th to the 14th in De Meern, The Netherlands, see here). I got a prepared Virtual Machine with SoaSuite 11g installed. But it is a one-CPU 2GB VM (although I could give it more memory, of course), and I would like to have my sources outside the VM. So for performance and development convenience I chose to install a separate JDeveloper.

Of course I went for the latest version (11.1.1.2, downloaded here). But I got connection failures when configuring the Application Server connection. I struggled with it for a few hours (in between struggling with another problem, though), disabling firewalls and googling around. But no clues.

This morning, with fresh courage, I thought: let's not be too stubborn and try the suggested version of JDeveloper (11.1.1.1). The Soa-Infra version was v11.1.1.0.0 - 11.1.1.1.0, according to the soa-infra page. The great thing about JDeveloper 11g is that the studio download is about 1GB. And having installed it, you have to download the SOA Composite editor separately via the Help>Update functionality. That's about an additional 230MB. And I found JDeveloper 10.1.3.x, at about 700MB, large already (SOA designers included)!

But it turned out to solve the problem. So no backwards compatibility. Tight version coupling.

So if you run into connection problems between JDeveloper 11g and SoaSuite, just check the version numbers.

Oh, and if you'd like to join the OPN Bootcamp in January, check out the enablement pages.

The course can be given in English if required.

Friday, 11 December 2009

Multi Operations BPEL

Most BPEL processes have just one entry and one exit point. Actually, if you create a new BPEL process you have the following templates:

- Synchronous

- Asynchronous

- Empty

For the first two templates (the non-empty ones) you can have the following service flavours:

- Synchronous (Request/Reply)

- Asynchronous Request/Reply

- Fire&Forget

Normally there are two ways to invoke these processes, corresponding to the type: the "process" operation for the synchronous ones and the "initiate" operation for the asynchronous and Fire&Forget ones.

So that's about it on the invocation flavours, right?

Multiple Operations

Well, not really. There are certain cases where you need to invoke your process in different ways. In the past I built processes that are initiated by one operation (the initiate for Asynchronous), but to which I also needed to send signals while they were running. For example, I built a scheduling process that is initiated to wait until a certain time and then do a certain task, based on information in the database. If an end user changes that information, because the scheduled date/time is moved, you want to reschedule the process so that it waits for the new time.

Another reason may be that you have to implement a certain WSDL that has been agreed upon (top-down method).

You do that by adding a new operation to the port type in the WSDL. This new operation can have other message types for input and output than the initiate operation.

For example, if you create a new asynchronous process named MultiOperationProcess, the WSDL will look like:

    <?xml version="1.0" encoding="UTF-8"?>
    <definitions name="MultiOperationProcess"
                 targetNamespace="http://xmlns.oracle.com/MultiOperationProcess"
                 xmlns="http://schemas.xmlsoap.org/wsdl/"
                 xmlns:client="http://xmlns.oracle.com/MultiOperationProcess"
                 xmlns:plnk="http://schemas.xmlsoap.org/ws/2003/05/partner-link/">

        <!-- ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~
        TYPE DEFINITION - List of services participating in this BPEL process
        The default output of the BPEL designer uses strings as input and
        output to the BPEL Process. But you can define or import any XML
        Schema type and use them as part of the message types.
        ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ -->
        <types>
            <schema xmlns="http://www.w3.org/2001/XMLSchema">
                <import namespace="http://xmlns.oracle.com/MultiOperationProcess"
                        schemaLocation="MultiOperationProcess.xsd"/>
            </schema>
        </types>

        <!-- ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~
        MESSAGE TYPE DEFINITION - Definition of the message types used as
        part of the port type definitions
        ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ -->
        <message name="MultiOperationProcessRequestMessage">
            <part name="payload" element="client:MultiOperationProcessProcessRequest"/>
        </message>
        <message name="MultiOperationProcessResponseMessage">
            <part name="payload" element="client:MultiOperationProcessProcessResponse"/>
        </message>

        <!-- ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~
        PORT TYPE DEFINITION - A port type groups a set of operations into
        a logical service unit.
        ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ -->
        <!-- portType implemented by the MultiOperationProcess BPEL process -->
        <portType name="MultiOperationProcess">
            <operation name="initiate">
                <input message="client:MultiOperationProcessRequestMessage"/>
            </operation>
        </portType>

        <!-- portType implemented by the requester of MultiOperationProcess BPEL process
             for asynchronous callback purposes -->
        <portType name="MultiOperationProcessCallback">
            <operation name="onResult">
                <input message="client:MultiOperationProcessResponseMessage"/>
            </operation>
        </portType>

        <!-- ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~
        PARTNER LINK TYPE DEFINITION
        the MultiOperationProcess partnerLinkType binds the provider and
        requester portType into an asynchronous conversation.
        ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ -->
        <plnk:partnerLinkType name="MultiOperationProcess">
            <plnk:role name="MultiOperationProcessProvider">
                <plnk:portType name="client:MultiOperationProcess"/>
            </plnk:role>
            <plnk:role name="MultiOperationProcessRequester">
                <plnk:portType name="client:MultiOperationProcessCallback"/>
            </plnk:role>
        </plnk:partnerLinkType>
    </definitions>

Adding an operation is simple:

    <?xml version="1.0" encoding="UTF-8"?>
    <definitions name="MultiOperationProcess"
                 targetNamespace="http://xmlns.oracle.com/MultiOperationProcess"
                 xmlns="http://schemas.xmlsoap.org/wsdl/"
                 xmlns:client="http://xmlns.oracle.com/MultiOperationProcess"
                 xmlns:plnk="http://schemas.xmlsoap.org/ws/2003/05/partner-link/">

        <!-- ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~
        TYPE DEFINITION - List of services participating in this BPEL process
        The default output of the BPEL designer uses strings as input and
        output to the BPEL Process. But you can define or import any XML
        Schema type and use them as part of the message types.
        ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ -->
        <types>
            <schema xmlns="http://www.w3.org/2001/XMLSchema">
                <import namespace="http://xmlns.oracle.com/MultiOperationProcess"
                        schemaLocation="MultiOperationProcess.xsd"/>
            </schema>
        </types>

        <!-- ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~
        MESSAGE TYPE DEFINITION - Definition of the message types used as
        part of the port type definitions
        ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ -->
        <message name="MultiOperationProcessRequestAapMessage">
            <part name="payload" element="client:MultiOperationProcessProcessAapRequest"/>
        </message>
        <message name="MultiOperationProcessRequestNootMessage">
            <part name="payload" element="client:MultiOperationProcessProcessNootRequest"/>
        </message>
        <message name="MultiOperationProcessResponseMessage">
            <part name="payload" element="client:MultiOperationProcessProcessResponse"/>
        </message>

        <!-- ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~
        PORT TYPE DEFINITION - A port type groups a set of operations into
        a logical service unit.
        ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ -->
        <!-- portType implemented by the MultiOperationProcess BPEL process -->
        <portType name="MultiOperationProcess">
            <operation name="initiateAap">
                <input message="client:MultiOperationProcessRequestAapMessage"/>
            </operation>
            <operation name="initiateNoot">
                <input message="client:MultiOperationProcessRequestNootMessage"/>
            </operation>
        </portType>

        <!-- portType implemented by the requester of MultiOperationProcess BPEL process
             for asynchronous callback purposes -->
        <portType name="MultiOperationProcessCallback">
            <operation name="onResult">
                <input message="client:MultiOperationProcessResponseMessage"/>
            </operation>
        </portType>

        <!-- ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~
        PARTNER LINK TYPE DEFINITION
        the MultiOperationProcess partnerLinkType binds the provider and
        requester portType into an asynchronous conversation.
        ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ -->
        <plnk:partnerLinkType name="MultiOperationProcess">
            <plnk:role name="MultiOperationProcessProvider">
                <plnk:portType name="client:MultiOperationProcess"/>
            </plnk:role>
            <plnk:role name="MultiOperationProcessRequester">
                <plnk:portType name="client:MultiOperationProcessCallback"/>
            </plnk:role>
        </plnk:partnerLinkType>
    </definitions>

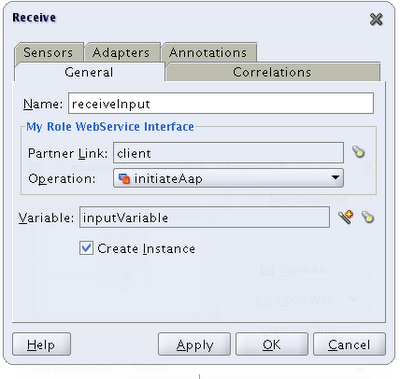

You can even change an existing operation. But then you'll have to change the initial receive:

Now you can add another Receive to cater for the receive events of the other porttype. Of course you need a Correlation Set to make sure that the event is sent to the right instance of the process.
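As a rough illustration of the correlation set mentioned above, the BPEL 1.1 fragment below sketches an initiating Receive and a later Receive that share one correlation set. The names `InstanceKey`, `client:instanceKey`, `inputAapVariable` and `inputNootVariable` are assumptions made up for this sketch, not taken from the example project. The WSDL would additionally need a `bpws:property` plus a `bpws:propertyAlias` per message type to tell the engine where the key sits in each payload.

```xml
<!-- Sketch only: correlation set, property and variable names are hypothetical -->
<process name="MultiOperationProcess"
         targetNamespace="http://xmlns.oracle.com/MultiOperationProcess"
         xmlns="http://schemas.xmlsoap.org/ws/2003/03/business-process/"
         xmlns:client="http://xmlns.oracle.com/MultiOperationProcess">
  <!-- partnerLinks and variables omitted for brevity -->
  <correlationSets>
    <!-- the property must be declared in the WSDL, with an alias per message type -->
    <correlationSet name="InstanceKey" properties="client:instanceKey"/>
  </correlationSets>
  <sequence name="main">
    <!-- first message: creates the instance and fixes the key value -->
    <receive name="receiveAap" partnerLink="client"
             portType="client:MultiOperationProcess" operation="initiateAap"
             variable="inputAapVariable" createInstance="yes">
      <correlations>
        <correlation set="InstanceKey" initiate="yes"/>
      </correlations>
    </receive>
    <!-- later message: routed to the running instance with the same key -->
    <receive name="receiveNoot" partnerLink="client"
             portType="client:MultiOperationProcess" operation="initiateNoot"
             variable="inputNootVariable" createInstance="no">
      <correlations>
        <correlation set="InstanceKey" initiate="no"/>
      </correlations>
    </receive>
  </sequence>
</process>
```

The first Receive initiates the correlation set; the second only matches against it, which is what routes the later event to the right running instance.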

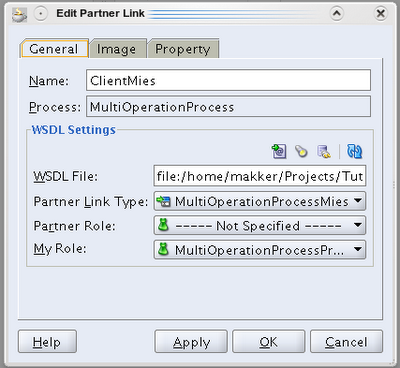

We just added an operation to the port type. But you can also add another port type. That, however, also requires another partner link type:

    <?xml version="1.0" encoding="UTF-8"?>
    <definitions name="MultiOperationProcess"
                 targetNamespace="http://xmlns.oracle.com/MultiOperationProcess"
                 xmlns="http://schemas.xmlsoap.org/wsdl/"
                 xmlns:client="http://xmlns.oracle.com/MultiOperationProcess"
                 xmlns:plnk="http://schemas.xmlsoap.org/ws/2003/05/partner-link/">

        <!-- ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~
        TYPE DEFINITION - List of services participating in this BPEL process
        The default output of the BPEL designer uses strings as input and
        output to the BPEL Process. But you can define or import any XML
        Schema type and use them as part of the message types.
        ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ -->
        <types>
            <schema xmlns="http://www.w3.org/2001/XMLSchema">
                <import namespace="http://xmlns.oracle.com/MultiOperationProcess"
                        schemaLocation="MultiOperationProcess.xsd"/>
            </schema>
        </types>

        <!-- ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~
        MESSAGE TYPE DEFINITION - Definition of the message types used as
        part of the port type definitions
        ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ -->
        <message name="MultiOperationProcessRequestAapMessage">
            <part name="payload" element="client:MultiOperationProcessProcessAapRequest"/>
        </message>
        <message name="MultiOperationProcessRequestNootMessage">
            <part name="payload" element="client:MultiOperationProcessProcessNootRequest"/>
        </message>
        <message name="MultiOperationProcessRequestMiesMessage">
            <part name="payload" element="client:MultiOperationProcessProcessMiesRequest"/>
        </message>
        <message name="MultiOperationProcessResponseMessage">
            <part name="payload" element="client:MultiOperationProcessProcessResponse"/>
        </message>

        <!-- ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~
        PORT TYPE DEFINITION - A port type groups a set of operations into
        a logical service unit.
        ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ -->
        <!-- portType implemented by the MultiOperationProcess BPEL process -->
        <portType name="MultiOperationProcess">
            <operation name="initiateAap">
                <input message="client:MultiOperationProcessRequestAapMessage"/>
            </operation>
            <operation name="initiateNoot">
                <input message="client:MultiOperationProcessRequestNootMessage"/>
            </operation>
        </portType>
        <portType name="MultiOperationProcessMies">
            <operation name="initiateMies">
                <input message="client:MultiOperationProcessRequestMiesMessage"/>
            </operation>
        </portType>

        <!-- portType implemented by the requester of MultiOperationProcess BPEL process
             for asynchronous callback purposes -->
        <portType name="MultiOperationProcessCallback">
            <operation name="onResult">
                <input message="client:MultiOperationProcessResponseMessage"/>
            </operation>
        </portType>

        <!-- ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~
        PARTNER LINK TYPE DEFINITION
        the MultiOperationProcess partnerLinkType binds the provider and
        requester portType into an asynchronous conversation.
        ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ -->
        <plnk:partnerLinkType name="MultiOperationProcess">
            <plnk:role name="MultiOperationProcessProvider">
                <plnk:portType name="client:MultiOperationProcess"/>
            </plnk:role>
            <plnk:role name="MultiOperationProcessRequester">
                <plnk:portType name="client:MultiOperationProcessCallback"/>
            </plnk:role>
        </plnk:partnerLinkType>
        <plnk:partnerLinkType name="MultiOperationProcessMies">
            <plnk:role name="MultiOperationProcessProviderMies">
                <plnk:portType name="client:MultiOperationProcessMies"/>
            </plnk:role>
        </plnk:partnerLinkType>
    </definitions>

Having this, you need another partner link in your process to get events from it:
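In BPEL source, the extra partner link could look roughly like the sketch below. The partner link type and role names come from the WSDL above; the partner link names `client` and `ClientMies` are assumptions (the designer lets you pick any name).

```xml
<!-- Sketch only: the partner link names "client" and "ClientMies" are assumed -->
<partnerLinks xmlns="http://schemas.xmlsoap.org/ws/2003/03/business-process/"
              xmlns:client="http://xmlns.oracle.com/MultiOperationProcess">
  <!-- original asynchronous partner link: the process provides the service
       and calls back the requester -->
  <partnerLink name="client"
               partnerLinkType="client:MultiOperationProcess"
               myRole="MultiOperationProcessProvider"
               partnerRole="MultiOperationProcessRequester"/>
  <!-- extra partner link for the second port type: inbound only, so no partnerRole -->
  <partnerLink name="ClientMies"
               partnerLinkType="client:MultiOperationProcessMies"
               myRole="MultiOperationProcessProviderMies"/>
</partnerLinks>
```

Because the Mies partner link type defines only a provider role, the second partner link has a `myRole` but no `partnerRole`.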

The corresponding XSD is:

    <schema attributeFormDefault="unqualified"
            elementFormDefault="qualified"
            targetNamespace="http://xmlns.oracle.com/MultiOperationProcess"
            xmlns="http://www.w3.org/2001/XMLSchema">
        <element name="MultiOperationProcessProcessAapRequest">
            <complexType>
                <sequence>
                    <element name="inputAap" type="string"/>
                </sequence>
            </complexType>
        </element>
        <element name="MultiOperationProcessProcessNootRequest">
            <complexType>
                <sequence>
                    <element name="inputNoot" type="string"/>
                </sequence>
            </complexType>
        </element>
        <element name="MultiOperationProcessProcessMiesRequest">
            <complexType>
                <sequence>
                    <element name="inputMies" type="string"/>
                </sequence>
            </complexType>
        </element>
        <element name="MultiOperationProcessProcessResponse">
            <complexType>
                <sequence>
                    <element name="result" type="string"/>
                </sequence>
            </complexType>
        </element>
    </schema>

Now you can implement about any WSDL with BPEL.
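To make the schema concrete, a request for the initiateAap operation might look like the message below. This is only a sketch: it assumes a plain document/literal SOAP 1.1 binding (the WSDL shown here contains no binding section), the `mul` prefix is arbitrary, and the value is made up.

```xml
<!-- Sketch only: assumes a document/literal SOAP 1.1 binding; value is made up -->
<soapenv:Envelope xmlns:soapenv="http://schemas.xmlsoap.org/soap/envelope/"
                  xmlns:mul="http://xmlns.oracle.com/MultiOperationProcess">
  <soapenv:Body>
    <!-- element name and namespace follow the XSD; elementFormDefault="qualified"
         means the child element is namespace-qualified as well -->
    <mul:MultiOperationProcessProcessAapRequest>
      <mul:inputAap>some value</mul:inputAap>
    </mul:MultiOperationProcessProcessAapRequest>
  </soapenv:Body>
</soapenv:Envelope>
```

The Noot and Mies requests would have the same shape, with `MultiOperationProcessProcessNootRequest`/`inputNoot` and `MultiOperationProcessProcessMiesRequest`/`inputMies` respectively.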

Multi Initiate

A BPEL process can have only one Receive with the initiate checkbox checked. So there is one and only one entry point in the BPEL process. This should also be the very first activity in the process. Actually, it isn't always. That is, if you have implemented an exception handler (Catch/CatchAll) on the outermost scope, then the activities in the fault handlers are the first in the BPEL process. And I found that there is a little pitfall in that. It might be that you have an exception-handling BPEL process that you call from the catch branches. No worries if it is a logging process (Fire&Forget). But if it returns a value to determine whether you have to do a retry or an abort, then you have a Receive activity before the first initiating Receive!

But apparently you cannot initiate your BPEL process from the other operations we introduced before. And that makes it only useful in cases where you initiate your BPEL process in one way and have it receive messages over the other operations. If you have to implement a certain WSDL with multiple operations that each have to initiate the process: "Houston, we have a problem".

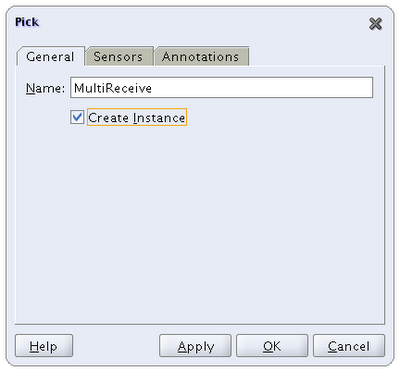

Until yesterday this is what I believed as well. But I under-estimated the use of the Pick activity!

The Pick activity splits up the initiation of the process from the messages it listens to:

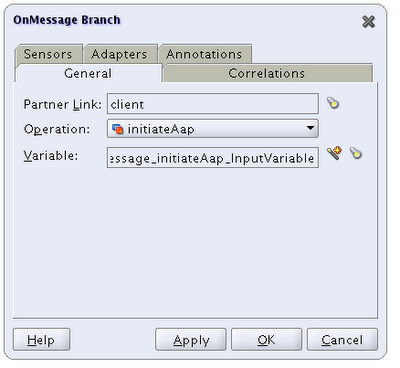

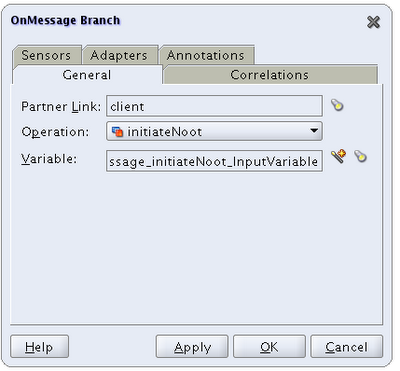

You can then remove the Timer Branch and add extra Branches. For Aap (Ape):

For Noot (Nut):

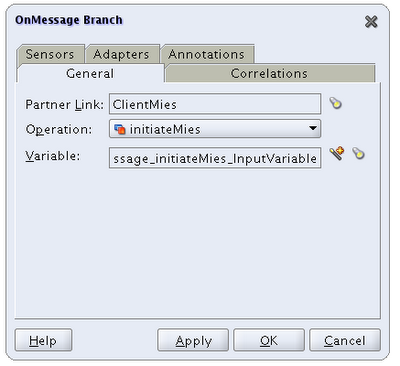

For Mies:

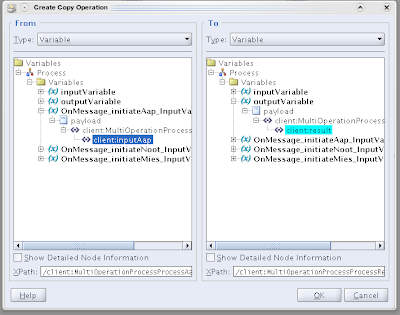

And in the branches you can for example add an assignment from the corresponding message:

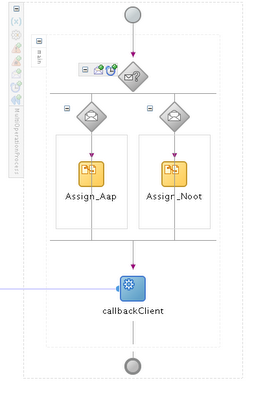

If you do that for each of the branches, the example process will look like:

Don't forget to remove the original InputMessage-variable.
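In BPEL 1.1 source, the Pick described above could look roughly like this sketch. The operation, message and element names come from the WSDL and XSD in this post; the variable names (`inputAapVariable`, `inputNootVariable`, `outputVariable`) and activity names are assumptions. Note that a Pick with `createInstance="yes"` may only contain onMessage branches, which matches removing the Timer (onAlarm) branch.

```xml
<!-- Sketch only: variable and activity names are hypothetical -->
<pick name="Pick_Initiate" createInstance="yes"
      xmlns="http://schemas.xmlsoap.org/ws/2003/03/business-process/"
      xmlns:client="http://xmlns.oracle.com/MultiOperationProcess">
  <!-- branch taken when the process is started through initiateAap -->
  <onMessage partnerLink="client" portType="client:MultiOperationProcess"
             operation="initiateAap" variable="inputAapVariable">
    <assign name="AssignAap">
      <copy>
        <from variable="inputAapVariable" part="payload"
              query="/client:MultiOperationProcessProcessAapRequest/client:inputAap"/>
        <to variable="outputVariable" part="payload"
            query="/client:MultiOperationProcessProcessResponse/client:result"/>
      </copy>
    </assign>
  </onMessage>
  <!-- branch taken when the process is started through initiateNoot -->
  <onMessage partnerLink="client" portType="client:MultiOperationProcess"
             operation="initiateNoot" variable="inputNootVariable">
    <assign name="AssignNoot">
      <copy>
        <from variable="inputNootVariable" part="payload"
              query="/client:MultiOperationProcessProcessNootRequest/client:inputNoot"/>
        <to variable="outputVariable" part="payload"
            query="/client:MultiOperationProcessProcessResponse/client:result"/>
      </copy>
    </assign>
  </onMessage>
</pick>
```

Each branch has its own input variable typed on its own message, which is why the original single input variable is no longer needed.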

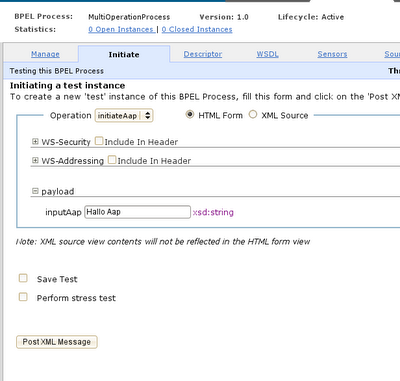

Unfortunately the BPEL Console does not support the extra port type in the test screen. I haven't been able to test it with SoapUI yet, so I'm not sure whether that works. Deleting the ClientMies parts of the process will work:

That leaves us with this process (belonging to the second WSDL above):

Conclusion

Now you can implement about any WSDL using BPEL, although apparently you're restricted to one partner link type and one port type with multiple operations. For me it was a revelation to be able to initiate a process in multiple ways. It might come in handy when you have a case with different initiation messages but a common process part. Normally you would create separate processes to receive the different messages, have them transform these to a common message format and call the common process. But apparently we can do it all in one process too.

You can find my code-examples here.

Monday, 30 November 2009

BPEL Partnerlinks thoughts

Well, at first thought the answer is simple: there is no one-to-one relationship between a partnerlink and the invokes of that partnerlink. So you can introduce as many invokes on a certain partnerlink as you want.

However at second thought there are a few considerations. I'll give a few that I came up with:

- Invokes are preferably done in separate scopes. That is: I prefer to do so. It provides the possibility to catch exceptions on that scope specifically. Variables are kept local as much as possible, especially when the documents they hold can be large. At the least you should think about the scope of variables. So if the invoke's input and output variables are declared locally, they cannot be reused amongst invokes.

- If the input and output variables of the invokes are local and thus not shared, you need to build them up from scratch. This can introduce extra assign steps that might be avoided when you declare the variables in a larger spanning scope. Extra assign activities with copy steps cost time. A little perhaps, but still they do. Shared variables, on the other hand, are stored to the dehydration store over and over again.

- A shared partnerlink thus has shared MCF (managed connection factory) properties, and possibly other adapter-related properties. Normally you probably want that. So in most cases it is advisable to share partnerlinks, especially on adapters. Then you have to configure them only once. However, in some cases (for instance with queues) you might use other parameters. Maybe you have two EBS instances and a process calling the same procedure but on separate instances. Then you need to split them up, of course.

- Be aware of transactions. The database adapter normally uses connection-pooled datasources. Database sessions are shared amongst instances. You might expect (but I won't rely on it!) that if you share a database adapter partnerlink (invoke it multiple times) in a synchronous process, every invoke would use the same database session. So for instance your application context settings, package variables, etc. are shared. Although I would check this at run time, you can use that for performance purposes. In a synchronous process you may rely on the fact that this occurs in the same database transaction. However, if you use different partnerlinks for what is in fact the same PL/SQL procedure/function call, the chance is large that you get another database session. BPEL might need to rely on XA transaction mechanisms. I don't think this is functionally a big problem. But it might cause issues in processes with high performance requirements.

- Maintenance: having multiple partnerlinks for the same database procedure, or even the same purpose, introduces extra maintenance cost and a higher risk. I would not expect a BPEL project to have multiple partnerlinks for the same purpose. So in a Change Request or Test Issue I tend to solve/change the first partnerlink and forget about possible others. And why should I be different from fellow developers? A good developer is a lazy one...

Input and output variables are based on the message definitions of the partnerlinks' WSDLs. So changing one partnerlink might change the message definitions. If you reuse your partnerlinks, changing them will affect all the related variables.
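The first consideration (an invoke in its own scope, with local variables and its own fault handler) could be sketched in BPEL 1.1 as below. Everything named here is hypothetical: the `ns1` namespace, the `DbProcedure` partner link, the message types and the query paths merely stand in for whatever a database adapter wizard would generate.

```xml
<!-- Sketch only: namespace, partner link, message types and paths are hypothetical -->
<scope name="Scope_CallProcedure"
       xmlns="http://schemas.xmlsoap.org/ws/2003/03/business-process/"
       xmlns:ns1="http://example.com/hypothetical/DbProcedure">
  <!-- variables declared here live only as long as this scope -->
  <variables>
    <variable name="Invoke_Input" messageType="ns1:args_in_msg"/>
    <variable name="Invoke_Output" messageType="ns1:args_out_msg"/>
  </variables>
  <!-- faults raised by this invoke are caught here, not at process level -->
  <faultHandlers>
    <catchAll>
      <empty name="HandleInvokeFault"/>
    </catchAll>
  </faultHandlers>
  <sequence>
    <!-- local variables must be filled from scratch before each invoke -->
    <assign name="Assign_Input">
      <copy>
        <from expression="'some value'"/>
        <to variable="Invoke_Input" part="InputParameters"
            query="/ns1:InputParameters/ns1:P_ID"/>
      </copy>
    </assign>
    <invoke name="Invoke_Procedure" partnerLink="DbProcedure"
            portType="ns1:DbProcedure_ptt" operation="DbProcedure"
            inputVariable="Invoke_Input" outputVariable="Invoke_Output"/>
  </sequence>
</scope>
```

A second invoke on the same partner link would get its own scope with the same structure; the partner link itself is declared once at process level and shared.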

Wednesday, 25 November 2009

Oracle AIA Links

- AIA Best Practice Center: http://www.oracle.com/technology/products/applications/aia/index.html

- Oracle Wiki on AIA: http://wiki.oracle.com/page/Application+Integration+Architecture

- Official AIA blog: http://blogs.oracle.com/aia/

- AIA OTN Forum: http://forums.oracle.com/forums/forum.jspa?forumID=577

- OTN AIA Foundation Pack page: http://www.oracle.com/technology/products/applications/aia/aia_fp1_index.html

- AIA Foundation Pack Tools: http://www.oracle.com/technology/products/applications/aia/aiatools.html

- OTN AIA PIP page: http://www.oracle.com/technology/products/applications/aia/aiapipnew.html

Hope you'll find them useful as well.

Sunday, 22 November 2009

Back to OpenSuse 11.1

Of course I had to install the native NVidia driver again using the one-click install. OpenSuse does not ship the proprietary drivers, but does provide a simple install solution.

The multimedia support (especially Flash and MP3) for 11.2 is installed via this post.

However, after some struggling I came to the conclusion that VMware Server 2.0.x just won't work under 11.2. The rpm does install, but vmware-config.pl hits several nasty compile errors. Apparently the kernel is just too new for VMware. I found some scripts to repair the install, based on the tar.gz deployment. But they were mainly focused on Ubuntu, doing some preparations using the Ubuntu package manager. So I re-installed 11.1.

First I tried to upgrade KDE to 4.3, but that did not work well. So the third time I just installed 11.1-vanilla.

I found a one-click install for multimedia support for 11.1 here (basically the same blog that posted the one-click install for 11.2).

Alas: OpenSuse 11.2 worked really fine: my laptop ran like a sun (there is a great commercial on wind energy on Dutch television, with an older man who hosts city trips for foreigners. He speaks really Dutch English with literally translated Dutch sayings on wind. My favorite: "I think You think they smell an hour in the wind"). But to me VMware is a real must.

Especially this week: I'll deliver an OPN Bootcamp on AIA in De Meern, the Netherlands, starting tomorrow (the mentioned dates are wrong: it's this week). It's based on a VMware image with a SoaSuite+AIA installation for the labs. If you're interested: there are still some seats available.

Monday, 16 November 2009

VMware Server 2.0.2 on OpenSuse 11.1

So I downloaded the one for my system (OpenSuse 11.1 x86_64), as well as the Windows variant for the bootcamp.

To upgrade an already installed VMware Server you can do (as root):

    makker-laptop:/usr/bin # rpm -Uhv VMware-server-2.0.2-203138.x86_64.rpm

To query which VMware Server you have installed:

    makker-laptop:/usr/bin # rpm -q VMware-server
    VMware-server-2.0.2-203138

To install it if you don't have VMware Server yet:

    makker-laptop:/usr/bin # rpm -ihv VMware-server-2.0.2-203138.x86_64.rpm

After that you should run vmware-config.pl in the /usr/bin folder as root.

In my case something went wrong in installing the vsock module. I did not see that in an earlier install. This is the message I got:

    make: Entering directory `/tmp/vmware-config6/vsock-only'
    make -C /lib/modules/2.6.27.37-0.1-default/build/include/.. SUBDIRS=$PWD SRCROOT=$PWD/. modules
    make[1]: Entering directory `/usr/src/linux-2.6.27.37-0.1-obj/x86_64/default'
    make -C ../../../linux-2.6.27.37-0.1 O=/usr/src/linux-2.6.27.37-0.1-obj/x86_64/default/. modules
      CC [M]  /tmp/vmware-config6/vsock-only/linux/af_vsock.o
      CC [M]  /tmp/vmware-config6/vsock-only/linux/driverLog.o
      CC [M]  /tmp/vmware-config6/vsock-only/linux/util.o
      CC [M]  /tmp/vmware-config6/vsock-only/linux/vsockAddr.o
      LD [M]  /tmp/vmware-config6/vsock-only/vsock.o
      Building modules, stage 2.
      MODPOST 1 modules
    WARNING: "VMCIDatagram_CreateHnd" [/tmp/vmware-config6/vsock-only/vsock.ko] undefined!
    WARNING: "VMCIDatagram_DestroyHnd" [/tmp/vmware-config6/vsock-only/vsock.ko] undefined!
    WARNING: "VMCI_GetContextID" [/tmp/vmware-config6/vsock-only/vsock.ko] undefined!
    WARNING: "VMCIDatagram_Send" [/tmp/vmware-config6/vsock-only/vsock.ko] undefined!
      CC      /tmp/vmware-config6/vsock-only/vsock.mod.o
      LD [M]  /tmp/vmware-config6/vsock-only/vsock.ko
    make[1]: Leaving directory `/usr/src/linux-2.6.27.37-0.1-obj/x86_64/default'
    cp -f vsock.ko ./../vsock.o
    make: Leaving directory `/tmp/vmware-config6/vsock-only'
    Unable to make a vsock module that can be loaded in the running kernel:
    insmod: error inserting '/tmp/vmware-config6/vsock.o': -1 Unknown symbol in module
    There is probably a slight difference in the kernel configuration between the
    set of C header files you specified and your running kernel. You may want to
    rebuild a kernel based on that directory, or specify another directory.

You might not need it, since vsock is an optional module that is used for communication between guests and hosts. But luckily I found a solution here. Mr. Swerdna states that there is a flaw in vmware-config.pl, for which he provides a patch. He also explains how to enable USB support in guests. So thank you very much, Mr. Swerdna.

Wednesday, 11 November 2009

PartnerlinkType not found

I would not say that I invented the principle of dynamic partnerlinks myself. But let's say that it was not my first time. And whatever I tried and checked, and double-checked, I kept hitting the error:

In the end I was so desperate that I thought: "Okay then, let's google the error". Afterwards I could kick myself with the question: "why didn't I do this earlier?". Because the answer was simple and can be found (amongst others) here: just clear the WSDL cache. Apparently my WSDL updates were deployed but did not replace the occurrence in the cache. Ouch.

Error while invoking bean "presentation manager": Cannot find partnerLinkType 2.

PartnerLinkType "{http://www.domain.nl/PROJECT/SUBPROJECT/PUB}PROJECT_PUB" is not found in WSDL at

"http://localhost:7777/orabpel/default/MyBpelProcess/1.0/_MyBpelProcess.wsdl".

Please make sure the partnerLinkType is defined in the WSDL.

ORABPEL-08016

Cannot find partnerLinkType 2.

PartnerLinkType "{http://www.domain.nl/PROJECT/SUBPROJECT/PUB}PROJECT_PUB" is not found in WSDL at

"http://localhost:7777/orabpel/default/MyBpelProcess/1.0/_MyBpelProcess.wsdl".

Please make sure the partnerLinkType is defined in the WSDL.

In my defense I would say that dynamic partnerlinks aren't hard, but a mistake is easily made and the dependencies are very sensitive. Also, I had to copy an existing solution and adapt it to a new situation. And that is harder than building it yourself. But maybe in the future I should consider Google a closer friend...

EU Commission against Sun acquisition

It does impose a significant delay on the acquisition. Oracle states that the objections of the European Commission are based on a misunderstanding of the database and open source market.

I'm curious how they see that, because it is not expressed in the quoted article.

Also, the US Department of Justice made explicitly clear that it approved because it is convinced that MySQL does not damage competition in the database market.

I find it curious, though, that the acquisition of a commercial company by another commercial company is under investigation/restriction because of a non-commercial product. I understand that the MySQL holding company is in fact commercial. But you and I and everyone who wants to can get a fork of the MySQL source and start our own database. Provided that we know C++ (I conveniently assume that MySQL is written in C++), which in my case is quite rusty, and understand the sources.

So I would ask: if Oracle promises to embrace MySQL with loving care, what would be the issue here then? The EU could just put some preconditions on the takeover. And sanctions if at a later stage Oracle turns out not to meet them.

But then: I'm not so political. And I'm in a struggle: I'm pretty proud that one of the major forces in these matters on European level is a Dutch woman, but on the other hand I have a history with Oracle. And I think the takeover would be a good one. Or at least an interesting one. For Sun, Oracle and the ICT market.

Yesterday I found that Oracle wants to keep Sun's Glassfish Appserver as the reference implementation of J2EE. I take it that includes, for example, the SOAP tool stack Metro. Which is nice, because then Oracle has three app servers, if I'm counting right:

- OC4J (originally from Orion), still the base of the J2EE parts of E-Business Suite, and probably for years to come.

- WebLogic (originally from BEA)

- Glassfish (originally from Sun)

Well, questions enough. And a nice subject to philosophize further upon. But to remember/rephrase a phrase from Mike Oldfield's "The Songs of Distant Earth": "Only time will tell"...

Thursday, 29 October 2009

Next Generation SOA

It reminded me of my own blog post on the subject. In that post I opposed the statement of SOA experts in a Dutch ICT weekly, where SOA was declared dead in favour of EDA. Because I could not remember exactly which article I had reacted to back then, I got a little anxious about getting in touch with her. But the reason that Anne declared SOA dead was the hype that vendors, customers and media had raised around the acronym. The acronym got so loaded with presumptions that it no longer stood for what it promised. And so many SOA projects fail because customers think they can buy a vendor's magic box that solves every problem. Or the magic hat of the Pixar magician Presto. And of course vendors would love to sell this to them. If they could... Declaring SOA dead as a hype with all its false promises, and focusing on Service Orientation instead: I second that completely. You can't buy or sell SOA, neither as a "Silver bullet" nor as a "Magic hat". It reminded me of a company that would sell an integration product on a blade server. Put it in a rack and your ERPs are integrated. Yeah, right. I assume that the actual implementation is less magic.

Thomas Erl and Anne had a session to cast out the "Evil SOA" and welcome the "Good SOA". I liked the Mike Oldfield music, but after a while it got too pressing, looping through the very first part of Tubular Bells, so that it almost cast me out. But it got me the nice SOA Patterns book by Thomas Erl.

I agree with Thomas and Anne that Service Orientation is very important and will grow in importance. But together with EDA. Well, actually, I think EDA is not an architecture in itself. As with SOA and cloud: if we're going to hype this idea we'll make the same mistakes again. But as stated in my former post, I think that Events and Services are (or should be) two tightly coupled ideas. No loose coupling here. You use these ideas to loosely couple your functionalities. But services are useless without events. And events (either coming through an ESB or as a stream) are quite useless without services that process them.

For the rest it was a good event to network. I met a few former colleagues and business partners, and people with whom I had mutual acquaintances. I saw a nice presentation by Sander Hoogendoorn and Twan van der Broek on agile SOA projects combined with ERP. I'd like to learn more about that.

Tuesday, 27 October 2009

VirtualBox: a virtual competitor of VMware?

One of the names that comes around on Virtualization on Linux is VirtualBox. I did not pay any attention to it since I was quite happy with VMware. I use VMware Server, since it's free and has about every feature VMware Workstation had in the latest version I used. That was Workstation 5.5 and the only thing Server lacked was Shared Folders.

But products evolve. And Server has become quite big. I found it quite a step to turn from 1.x to 2.0 since the footprint increased about 5 times! From 100MB to about 500MB.

Last week my colleague Erik asked me if I knew VirtualBox. I said I did, that is by name. A colleague on his project advised him to go for VirtualBox. So I thought I might give it a try.

Some of the features of VirtualBox that interested me were:

- it's about 40MB! That is very small for such a complete product. I like that. I'm fond of small but feature-rich products, like TotalCommander, Xtrans and IrfanView on Windows. Apparently there are still programmers that go for smart and compact products.

- I found articles on the internet claiming that it is faster than VMware. I also found statements suggesting it is slower. But on a simple laptop, performance would be the deciding feature.

- Seamless mode: VirtualBox can have the guest applications run in separate windows alongside the native applications on your host. It layers the guest taskbar on top of the taskbar of your host. Looks neat!

- Shared Folders: this was the differentiating feature between Server and Workstation. I worked around that lack by installing a FileZilla Server on Windows hosts, or using SFTP over SSH on Linux. But it is handy to have that feature.

- It's a client/standalone app: it may seem like a nonsense feature. But VMware Server comes with an Apache Tomcat application server that serves the infrastructure application. That's nice for a server-based application, but on a laptop it is quite overdone.

- 3D Acceleration: this summer I wanted to run a Windows racing game under VMware. It didn't do it. I should try it under VirtualBox with 3D acceleration on.

- But the important feature to have it work for me is that it should be able to run or import VMware images. It turns out that it cannot. You have to create a new VirtualBox image. But luckily you can base it on the virtual disks of a VMware image. Below I tell you how.

Pre-requisites:

- Install VirtualBox: download it from http://www.virtualbox.org/wiki/Downloads. Note that there is an Open Source and a Closed Source version. Both are free, but the Closed Source version is the most feature-rich. Importing and exporting is only available in the Closed Source version. The Open Source version is apparently available in many Linux repositories. But I went for the Closed Source one.

- Uninstall VMware Tools from the guest OS before migrating. VirtualBox can install its own guest tools with their own drivers. For Windows you can do it from the software pane in the configuration screen. Under Linux (rpm based) you can remove it using rpm -e VMwareTools-5.0.0-

.i386.rpm. To query the precise version you can do rpm -q VMwareTools

Then you can create a new virtual machine in VirtualBox. You can find a how-to with screen dumps here.

For the hard disks, use the existing disks of your VMware image. If the VM is a Windows guest, the disks are probably IDE disks. You could try to use SATA as an Additional Controller (check the checkbox) and couple them to a SATA channel in the order of the disks. Mark the first one as bootable. If it is a Linux guest the disks are probably SCSI (LsiLogic), so choose SCSI as the additional controller.

After adding the disks and other hardware, you can start it. After starting it you can install the Guest addons. These are necessary to have the appropriate drivers. Under windows, an installer is automatically started. Under Linux a virtual cdrom is mounted. Do a 'su -' to root and start the appropriate '.run' file or do a sudo:

sudo /media/cdrom/VBoxLinuxAdditions-x86.run

In this case it is for a 32-bit Linux. For 64-bit Linux guests it should be the amd64 variant. After the install you should restart the guest.

Then you can reconfigure your display type (resolution, color-depth) and the network adapters.

Experiences

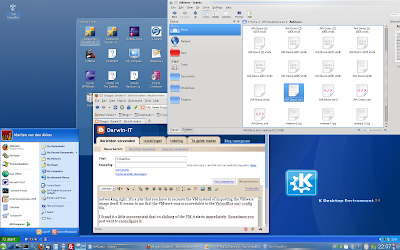

Well, my first experiences are pretty positive. It is sometimes a struggle to get the display and the networking right. It's a pity that you have to recreate the VM instead of importing the VMware image itself. It seems to me that the VMware vmx file should be convertible to the VirtualBox xml config file.

I found it a little inconvenient that the VM starts immediately when you click it. Sometimes you just want to reconfigure it.

I still have to experience the performance. So I don't know yet whether VirtualBox is an advantage on this point. But the Seamless mode is really neat.

Thursday, 8 October 2009

JNDI in your Applications

If your service needs a database, then from a standalone application you would just create a connection using a driver, a JDBC connect string and a username/password. Probably you would get those values from a property file. But within an Application Server you would use JNDI to get a DataSource and retrieve a Connection from that.

But the methods that are called from your services within the application server should somehow get a connection in a uniform manner. Because, how would you determine if the method is called from a running standalone application or from an Application Server? The preferred approach, as I see it, is to make the application in fact agnostic of it. The retrieval of a database connection should in both cases be the same. So how to achieve this?

The solution that I found is to have the methods retrieve the database connection in all cases from a JNDI Context. To enable this, you should take care of having a DataSource registered upfront, before calling the service-implementing-methods.

JNDI Context

To understand JNDI you could take a look into the JNDI Tutorial.

It all starts with retrieving a JNDI Context. From a context you can retrieve every type of Object that is registered with it. So it need not necessarily be a database connection or DataSource, although I believe this is one of the most obvious usages of JNDI.

To retrieve a JNDI Context I create a helper class:

package nl.darwin_it.crmi.jndi; // a hyphen is not allowed in a Java package name

/**

* Class providing and initializing JndiContext.

*

* @author Martien van den Akker

* @author Darwin IT Professionals

*/

import java.util.Hashtable;

import javax.naming.Context;

import javax.naming.InitialContext;

import javax.naming.NamingException;

import nl.darwin_it.crmi.CrmiBase; // a hyphen is not allowed in a Java package name

import oracle.jdbc.pool.OracleDataSource;

public class JndiContextProvider extends CrmiBase {

public static final String DFT_CONTEXT_FACTORY = "com.sun.jndi.fscontext.RefFSContextFactory";

public static final String DFT = "Default";

private Context jndiContext = null;

public JndiContextProvider() {

}

/**

* Get an Initial Context. No environment is passed to the constructor, so the context

* factory comes from the J2EE environment or, when running standalone, from the

* jndi.properties file on the classpath.

*

* @return the initial JNDI context

* @throws NamingException

*/

public Context getInitialContext() throws NamingException {

final String methodName = "getInitialContext";

debug("Start "+methodName);

Context ctx = new InitialContext();

debug("End "+methodName);

return ctx;

}

/**

* Bind the Oracle Datasource to the JndiName

* @param ods

* @param jndiName

* @throws NamingException

*/

public void bindOdsWithJNDI(OracleDataSource ods, String jndiName) throws NamingException {

final String methodName = "bindOdsWithJNDI";

debug("Start "+methodName);

//Registering the data source with JNDI

Context ctx = getJndiContext();

ctx.bind(jndiName, ods);

debug("End "+methodName);

}

/**

* Unbind jndiName

* @param jndiName

* @throws NamingException

*/

public void unbindJNDIName(String jndiName) throws NamingException {

final String methodName = "unbindJNDIName";

debug("Start "+methodName);

//UnRegistering the data source with JNDI

Context ctx = getJndiContext();

ctx.unbind(jndiName);

debug("End "+methodName);

}

/**

* Set jndiContext;

*/

public void setJndiContext(Context jndiContext) {

this.jndiContext = jndiContext;

}

/**

* Get jndiContext

*/

public Context getJndiContext() throws NamingException {

if (jndiContext==null){

Context context = getInitialContext();

setJndiContext(context);

}

return jndiContext;

}

}

This class provides a JNDI Context that can be used to retrieve a DataSource, using JndiContextProvider.getJndiContext().

This method retrieves an InitialContext that is cached in a private variable.

From within the application server the InitialContext is provided by the Application Server.

But when running as a standalone application a default InitialContext is provided. This one does not have any content, and it has no Service Provider that can deliver any objects. You could get a usable InitialContext by passing the InitialContext() constructor a Hashtable with the java.naming.factory.initial property set to com.sun.jndi.fscontext.RefFSContextFactory.

The problem is that if you use the Hashtable option, the code that obtains the InitialContext is no longer the same as within the Application Server. Fortunately, if you don't provide the Hashtable, the constructor looks for a jndi.properties file on the classpath. This file can contain several properties, but for our situation only the following is needed:

java.naming.factory.initial=com.sun.jndi.fscontext.RefFSContextFactory

This Context factory is one of the jndi-provider-factories delivered with the standard JNDI implementation. It is a simple FileSystemContextFactory, a JNDI factory that refers to the filesystem.
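To see the mechanism without needing fscontext.jar, here is a minimal sketch with a made-up in-memory factory. The class names InMemoryJndiDemo and MapContextFactory and the JNDI name jdbc/demoDS are assumptions for illustration only; the factory supports just the String-based bind, lookup and unbind calls. The point is that the application code only ever calls new InitialContext() without arguments, and the factory is picked up from configuration (here a system property, which plays the same role as the line in jndi.properties).

```java
import java.lang.reflect.Proxy;
import java.util.Hashtable;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import javax.naming.Context;
import javax.naming.InitialContext;
import javax.naming.NameAlreadyBoundException;
import javax.naming.NameNotFoundException;
import javax.naming.NamingException;
import javax.naming.OperationNotSupportedException;
import javax.naming.spi.InitialContextFactory;

final class InMemoryJndiDemo {

    /** Hypothetical stand-in for RefFSContextFactory: keeps bindings in a static map. */
    public static class MapContextFactory implements InitialContextFactory {
        private static final Map<String, Object> BINDINGS = new ConcurrentHashMap<>();

        public MapContextFactory() {
        }

        @Override
        public Context getInitialContext(Hashtable<?, ?> environment) {
            // A dynamic proxy avoids implementing every method of javax.naming.Context.
            return (Context) Proxy.newProxyInstance(
                MapContextFactory.class.getClassLoader(),
                new Class<?>[] {Context.class},
                (proxy, method, args) -> {
                    String op = method.getName();
                    if (op.equals("close")) {
                        return null;
                    }
                    if (args == null || !(args[0] instanceof String)) {
                        throw new OperationNotSupportedException(op);
                    }
                    String name = (String) args[0];
                    switch (op) {
                        case "bind":
                            if (BINDINGS.putIfAbsent(name, args[1]) != null) {
                                throw new NameAlreadyBoundException(name);
                            }
                            return null;
                        case "lookup":
                            Object bound = BINDINGS.get(name);
                            if (bound == null) {
                                throw new NameNotFoundException(name);
                            }
                            return bound;
                        case "unbind":
                            BINDINGS.remove(name);
                            return null;
                        default:
                            throw new OperationNotSupportedException(op);
                    }
                });
        }
    }

    /** Binds, looks up and unbinds a value through a no-argument InitialContext. */
    static Object bindAndLookup(String jndiName, Object value) {
        // Same role as a jndi.properties line: java.naming.factory.initial=<factory class>
        System.setProperty(Context.INITIAL_CONTEXT_FACTORY, MapContextFactory.class.getName());
        try {
            Context ctx = new InitialContext(); // the identical call works inside an application server
            ctx.bind(jndiName, value);
            try {
                return ctx.lookup(jndiName);
            } finally {
                ctx.unbind(jndiName); // leave no stale binding behind
            }
        } catch (NamingException e) {
            throw new IllegalStateException("JNDI round trip failed", e);
        }
    }

    public static void main(String[] args) {
        System.out.println(bindAndLookup("jdbc/demoDS", "placeholder for a DataSource"));
    }
}
```

In a real standalone run you would leave out the System.setProperty call and let jndi.properties name the factory, so that the calling code stays identical in both environments.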

Now the only thing you need to do is to bind a DataSource to the Context, right before you call the method in question, for example in the main method of your application.

That DataSource you should create using the properties from the configuration file.
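Reading those properties could look like the sketch below. The class name DbSettings and the property keys (db.jndiName, db.url, db.user, db.password) are made up for this example; use whatever keys your own configuration file has.

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.Properties;

final class DbSettings {
    final String jndiName;
    final String url;
    final String user;
    final String password;

    private DbSettings(String jndiName, String url, String user, String password) {
        this.jndiName = jndiName;
        this.url = url;
        this.user = user;
        this.password = password;
    }

    /** Builds settings from loaded properties; all key names are hypothetical. */
    static DbSettings from(Properties props) {
        return new DbSettings(
            required(props, "db.jndiName"),
            required(props, "db.url"),
            required(props, "db.user"),
            props.getProperty("db.password", ""));
    }

    /** Fails early with a clear message instead of a NullPointerException later on. */
    private static String required(Properties props, String key) {
        String value = props.getProperty(key);
        if (value == null || value.trim().isEmpty()) {
            throw new IllegalArgumentException("Missing property: " + key);
        }
        return value.trim();
    }

    /** Convenience for trying the sketch without a file on disk. */
    static DbSettings parse(String propertiesText) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(propertiesText));
        } catch (IOException e) {
            throw new IllegalStateException(e);
        }
        return from(props);
    }
}
```

The url, user and password fields are then the arguments for creating the DataSource, and jndiName is the name to bind it under.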

To create a datasource I created another helper class: JDBCConnectionProvider:

package nl.darwin_it.crmi.jdbc; // a hyphen is not allowed in a Java package name

/**

* Class providing JdbcConnections

*

* @author Martien van den Akker

* @author Darwin IT Professionals

*/

import java.sql.Connection;

import java.sql.DriverManager;

import java.sql.SQLException;

import java.util.Hashtable;

import javax.naming.Context;

import javax.naming.InitialContext;

import javax.naming.NamingException;

import javax.naming.NoInitialContextException;

import oracle.jdbc.pool.OracleDataSource;

public abstract class JDBCConnectionProvider {

public static final String ORCL_NET_TNS_ADMIN = "oracle.net.tns_admin";

/**

* Create a database Connection

*

* @param driver

* @param connectString

* @param userName

* @param password

* @return

* @throws ClassNotFoundException

* @throws SQLException

*/

public static Connection createConnection(String driver, String connectString, String userName,

String password) throws ClassNotFoundException, SQLException {

Connection connection = null;

Class.forName(driver);

// DriverManager.registerDriver(new oracle.jdbc.driver.OracleDriver());

// @machineName:port:SID, userid, password

connection = DriverManager.getConnection(connectString, userName, password);

return connection;

}

/**

* Create a database Connection after setting TNS_ADMIN property.

*

* @param driver

* @param tnsAdmin

* @param connectString

* @param userName

* @param password

* @return

* @throws ClassNotFoundException

* @throws SQLException

*/

public static Connection createConnection(String driver, String tnsAdmin, String connectString, String userName,

String password) throws ClassNotFoundException, SQLException {

if (tnsAdmin != null) {

System.setProperty(ORCL_NET_TNS_ADMIN, tnsAdmin);

}

Connection connection = createConnection(driver, connectString, userName, password);

return connection;

}

/**

* Create an Oracle DataSource

* @param driverType

* @param connectString

* @param dbUser

* @param dbPassword

* @return

* @throws SQLException

*/

public static OracleDataSource createDataSource(String driverType, String connectString, String dbUser,

String dbPassword) throws SQLException {

OracleDataSource ods = new OracleDataSource();

ods.setDriverType(driverType);

ods.setURL(connectString);

ods.setUser(dbUser);

ods.setPassword(dbPassword);

return ods;

}

public static Connection getConnection(Context context, String jndiName) throws NamingException, SQLException {

//Performing a lookup for a pool-enabled data source registered in JNDI tree

Connection conn = null;

OracleDataSource ods = (OracleDataSource)context.lookup(jndiName);

conn = ods.getConnection();

return conn;

}

}

The method createDataSource will create an OracleDataSource. Today, through trial and error, I discovered that when running in a BPEL 10.1.2 server you should retrieve a plain javax.sql.DataSource instead.

Then with JndiContextProvider.bindOdsWithJNDI(OracleDataSource ods, String jndiName) you can bind the DataSource with the Filesystem Context Provider.

Having done that from your implementation code you can get a database connection using JDBCConnectionProvider.getConnection(Context context, String jndiName)
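Because the object bound in the server may not be an OracleDataSource, a lookup that only relies on the javax.sql.DataSource interface works in both environments. This is a sketch of that idea, not the code I run in production; the class name PortableConnectionLookup is made up.

```java
import java.sql.Connection;
import java.sql.SQLException;
import javax.naming.Context;
import javax.naming.NamingException;
import javax.sql.DataSource;

final class PortableConnectionLookup {

    /** Casts the bound object to the DataSource interface, with a readable error if it is something else. */
    static DataSource asDataSource(Object bound, String jndiName) {
        if (bound instanceof DataSource) {
            return (DataSource) bound; // OracleDataSource implements this interface as well
        }
        String found = (bound == null) ? "null" : bound.getClass().getName();
        throw new IllegalArgumentException(
            "JNDI name " + jndiName + " is bound to " + found + ", not a javax.sql.DataSource");
    }

    /** Looks up the DataSource under jndiName and opens a connection from it. */
    static Connection getConnection(Context context, String jndiName)
            throws NamingException, SQLException {
        return asDataSource(context.lookup(jndiName), jndiName).getConnection();
    }
}
```

The explicit type check is a design choice: a plain cast would also work, but the resulting ClassCastException does not tell you which JNDI name was involved.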

I haven't discovered how yet, but somewhere (in the filesystem, I presume) the DataSource is registered. So a subsequent bind will throw an exception. But since you might want to change your connection in your property file, it is convenient to unbind the DataSource at the end of the main method. The method JndiContextProvider.unbindJNDIName(String jndiName) takes care of that.

What also took me quite a long time to figure out is which jar files you need to get this working when running in a standalone application. Somehow I found quite a lot of examples but no list of jar files. I even understood (wrongly, as it turned out) that the base J2SE 5 or 6 should deliver these packages.

The jars you need are:

- jndi.jar

- fscontext.jar

- providerutil.jar

You can find them in the BPEL PM 10.1.2 tree and probably also in an Oracle 10.1.3.x Application Server or WebLogic 10.3 (or nowadays 11g). But the Java SE ones can be found via

http://java.sun.com/products/jndi/downloads/index.html

There you'll find the following packages:

- jndi-1_2_1.zip

- fscontext-1_2-beta3.zip

Conclusion

This approach gave me a database connection both within the application server and from a standalone application. The JNDI name I still fetch from a property file. That way it is configurable from which JNDI source the connection has to be fetched (I don't like hardcoding).

In my search I found that from Oracle 11g onwards you can use the Universal Connection Pool library: http://www.oracle.com/technology/pub/articles/vasiliev-oracle-jdbc.html to have a connection pool from your application.

Monday, 21 September 2009

OPN Bootcamps delivered by Darwin-iT

There are still seats available so if you would like to join and are in the position to do so then it is still possible. More info on the course is found here.

Other bootcamps in October that will be delivered by us in De Meern are:

- Oracle BI Enterprise Edition, on 6 - 8 October 2009.

- AIA Implementation Boot Camp, on 27 - 29 October 2009

The bootcamps are also open for foreign partners, that is foreign to the Netherlands.

We're looking forward to meeting you at one of these courses. Dutch potential participants should check out the training pages on our website regularly. More detailed info on these courses and more planned dates are expected soon.

Thursday, 17 September 2009

Shortcut to your VM on the desktop

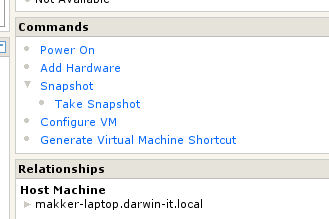

What I recently found, though, is the ability to create a shortcut on your desktop to a particular VM. This is handy because it pops up the console where you can log in with your VMware Administrator account, and then the VM will start. From the console you can also suspend or stop the VM using the Remote/Troubleshoot menu.

To create a shortcut on your desktop, go to the summary page of your VM. In the commands portlet you find the "Generate Virtual Machine Shortcut" link:

Follow the instructions and you get an Icon with the shortcut on your desktop.

Tuesday, 15 September 2009

I can do WSIF me...

WSIF stands for Web Services Invocation Framework and is an Apache technology that enables a Java service call to be described in a WSDL (Web Services Description Language). The WSDL describes how the request and response documents map to the input and output objects of the Java service call and how the SOAP operation binds to the Java method call.

BPEL Process Manager supports several Java technologies to be called using WSIF. The advantage of using WSIF is that from a BPEL perspective it works exactly the same as with web services. The functional definition part of the WSDL (the schemas, message definitions and the ports) is the same. The differences are in the implementation parts. Instead of using SOAP over HTTP, BPEL PM will call the Java service directly. The performance is then about the same as calling Java from embedded Java. BPEL PM has to be able to call the Java libraries, so they have to be in the class path.

Although the chapter "Using WSIF for Integration" from the BPEL Cookbook states quite clearly how to work out the Java callout from BPEL, I still ran into some struggles.

Generating the XML Facades

The BPEL Cookbook suggests using the schemac utility to compile the schemas in the WSDL to the XML Facade classes. The target directory should be <bpelpm_oracle_home>\integration\orabpel\system\classes. I found this not so handy. First of all, I don't like to have a bunch of classes in this folder. It tends to get messed up. So I want to get them in a jar file. But I also found that schemac was not able to compile the classes as such. So I started off by adding two parameters:

C:\DATA\projects\EmailConverter\wsdl>schemac -sourceOut ..\src -noCompile WS_EmailConverter.wsdl

The -sourceOut parameter takes care of creating the source files in the denoted folder. The -noCompile parameter causes the utility not to compile the classes.

You could also use the -jar option to jar the compiled classes into a jar file, which essentially is my goal (but then you should presumably omit the -noCompile option).

Also you could add a -classpath <path> option to denote a classpath.

I found that my major problem was that I missed some jar files. So although I used schemac from the OraBPEL Developer prompt, my classpath was not set properly. Something I did not find in the cookbook. The jar files you need to successfully compile the classes are:

- orabpel.jar

- orabpel-boot.jar

- orabpel-thirdparty.jar

- xmlparserv2.jar

But the XML Facade classes are to be used in a java implementation class that is to be called from BPEL PM using WSIF. And since it is one project where the facades are strictly for the implementing java class I want them shipped within the same jar file as my custom-code. That way I have to deliver only one jar file to the test and production servers. So I still do not want the schemac utility to compile and jar my XML Facade classes.

To automate this I created an ant file.

Generate the XML Facades using Ant

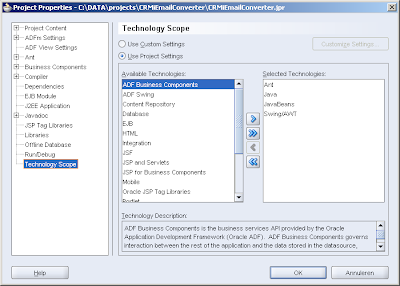

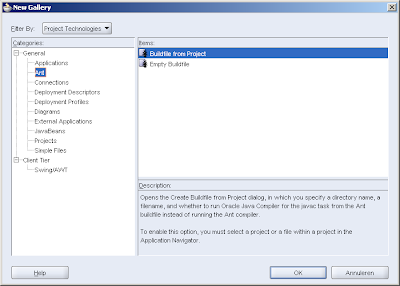

To be able to use ant within your project it is handy to use the ANT technology scope. That way you're able to let JDeveloper generate an Ant-build-file based on your project.

To do so go to the project properties and go to the Technology Scope entry where you can shuttle the Ant scope to the right:

Then it is handy to have a user-library created that contains the OraBPEL libraries needed to compile the XML Facades:

Having done that you can create a build file based on your project that contains amongst others a compile target with the appropriate classpath (including the just created user-libs) set:

Add the genFacades Ant target with the schemac task

To add the genFacades ant target for generating the facades, you have to define the schemac task in the top of your build.xml file:

<taskdef name="schemac" classname="com.collaxa.cube.ant.taskdefs.Schemac"

classpath="${bpel.home}\lib\orabpel-ant.jar"/>

For this to work you have to extend the build.properties with the bpel.home property:

#Tue Sep 15 11:28:59 CEST 2009

javac.debug=on

javac.deprecation=on

javac.nowarn=off

oracle.home=c:/oracle/jdeveloper101340

bpel.home=C:\\oracle\\bpelpm\\integration\\orabpel

app.server.lib=${bpel.home}\\system\\classes

build.home=${basedir}/build

output.dir=${build.home}/classes

build.classes.home=${build.home}/classes

build.jar.home=${build.home}/jar

build.jar.name=${ant.project.name}.jar

build.jar.mainclass=nl.rabobank.crmi.emailconverter.EmailConverter

(I also added some other properties I'll use later on.) Note that the BPEL home (for 10.1.2) is in the "integration\orabpel" sub-folder of the Oracle home.
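The doubled backslashes in bpel.home are there because, as far as I know, Ant reads build.properties in the java.util.Properties format, where a backslash is an escape character. A small sketch (the class name BackslashEscapeDemo is made up) shows what happens to the three ways of writing the path:

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.Properties;

final class BackslashEscapeDemo {

    /** Parses one properties line and returns the resulting bpel.home value. */
    static String bpelHome(String propertiesLine) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(propertiesLine));
        } catch (IOException e) {
            throw new IllegalStateException(e);
        }
        return props.getProperty("bpel.home");
    }

    public static void main(String[] args) {
        // Java source needs its own escaping: "\\\\" below is "\\" in the properties file.
        // Doubled backslashes survive as single ones: C:\oracle\bpelpm\integration\orabpel
        System.out.println(bpelHome("bpel.home=C:\\\\oracle\\\\bpelpm\\\\integration\\\\orabpel"));
        // Single backslashes are silently dropped: C:oraclebpelpmintegrationorabpel
        System.out.println(bpelHome("bpel.home=C:\\oracle\\bpelpm\\integration\\orabpel"));
        // Forward slashes need no escaping at all: C:/oracle/bpelpm/integration/orabpel
        System.out.println(bpelHome("bpel.home=C:/oracle/bpelpm/integration/orabpel"));
    }
}
```

That is also why oracle.home in the same file can simply use forward slashes.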

Having done that, my genFacades target looks like:

<target name="genFacades" description="Generate XML Facade sources">

<echo>First delete the XML Facade sources generated earlier from ${basedir}/src/nl/darwin-it/www/INT </echo>

<mkdir dir="${basedir}/src/nl/darwin-it/www/INT"/>

<delete>

<fileset dir="${basedir}/src/nl/darwin-it/www/INT" includes="**/*"/>

</delete>

<echo>Generate XML Facade sources from ${basedir}\wsdl\INTI_WS_EmailConverter.wsdl to ${basedir}/src</echo>

<schemac input="${basedir}\wsdl\INTI_WS_EmailConverter.wsdl"

sourceout="${basedir}/src" nocompile="true"/>

</target>

This target first creates the directory that the classes are generated into (if it does not exist yet) and then deletes the files that were generated in that directory in an earlier iteration. This is to clean up classes that became obsolete because of XSD changes.

Then it does the schemac task that is defined earlier in the project.

Compile and Jar the files

You can now implement your java code as suggested in the BPEL Cookbook. After that you want to compile and jar the project files.

Since we use BPEL 10.1.2 at my current customer (I know it's high time to upgrade, the team is busy with that), I found that the classes were not accepted by BPEL PM. It led to an unsupported class version error. BPEL 10.1.2 only supports classes compiled for Java SE 1.4. So I had to adapt my compile target:

<target name="compile" description="Compile Java source files" depends="init">

<echo>Compile Java source files</echo>

<javac destdir="${output.dir}" classpathref="classpath"

debug="${javac.debug}" nowarn="${javac.nowarn}"

deprecation="${javac.deprecation}" encoding="Cp1252" source="1.4"

target="1.4">

<src path="src"/>

</javac>

</target>

Because it is sometimes handy to compile/build within JDeveloper, it is safe to also set the Java compile version properties in the project properties.

The create-jar and deploy-jar targets look like:

<!-- create Jar -->

<target name="create-jar" description="Create Jar file" depends="compile">

<echo message="Create Jar file"></echo>

<jar destfile="${build.jar.home}/${build.jar.name}" basedir="${output.dir}">

<manifest>

<attribute name="Main-Class" value="${build.jar.mainclass}"/>

</manifest>

</jar>

</target>

<!-- deploy Jar -->

<target name="deploy-jar" description="deploy jar file" depends="create-jar">

<echo message="Deploy Jar file to ${app.server.lib}"></echo>

<copy todir="${app.server.lib}" file="${build.jar.home}/${build.jar.name}" overwrite="true" />

</target>

So this should work. Together with the BPEL Cookbook it resulted in a working integration. And since it is a direct coupling it is pretty fast (although my implementation does not do much yet...).
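The unsupported class version error mentioned above can be caught before deploying, by looking at the header of a compiled class. Every class file starts with the magic number 0xCAFEBABE, followed by a minor and a major version; major version 48 corresponds to Java 1.4, 49 to Java 5 and 50 to Java 6. Below is a small sketch of such a check; the class name ClassVersionCheck is made up.

```java
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.file.Files;
import java.nio.file.Paths;

final class ClassVersionCheck {
    /** Class-file major version produced by javac with target 1.4. */
    static final int JAVA_1_4_MAJOR = 48;

    /** Reads the major version from the first 8 bytes of a class file. */
    static int majorVersion(byte[] classBytes) {
        if (classBytes.length < 8) {
            throw new IllegalArgumentException("Too short to be a class file");
        }
        ByteBuffer header = ByteBuffer.wrap(classBytes); // big-endian by default
        if (header.getInt() != 0xCAFEBABE) {
            throw new IllegalArgumentException("Missing 0xCAFEBABE magic number");
        }
        header.getShort();                 // minor version, not needed here
        return header.getShort() & 0xFFFF; // major version as unsigned 16-bit value
    }

    /** True when a Java 1.4 runtime can load the class. */
    static boolean loadableByJava14(byte[] classBytes) {
        return majorVersion(classBytes) <= JAVA_1_4_MAJOR;
    }

    public static void main(String[] args) throws IOException {
        if (args.length != 1) {
            System.err.println("Usage: java ClassVersionCheck <path to .class file>");
            return;
        }
        byte[] bytes = Files.readAllBytes(Paths.get(args[0]));
        System.out.println(args[0] + ": major version " + majorVersion(bytes)
            + (loadableByJava14(bytes) ? " (fine for Java 1.4)" : " (too new for Java 1.4)"));
    }
}
```

Run it against one of the classes in the build output directory after the compile target has finished.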

How new is new with Oracle-Sun?

Immediately I thought: how new? Where did I hear that before? Oh yeah, last year around Open World, the database-machine with HP. I already found the announcement of the Sun-acquisition by Oracle remarkable regarding this database machine. Apparently the new thing is in the Sun FlashFire Technology and that they now specifically talk about OLTP (I can't remember if they named the Oracle-HP Database machine explicitly as such).

Later in the day I also found a declaration that Oracle is fastest on Sun hardware. This was to state that Oracle is serious about embracing the Sun technology. "Oracle and Sun together are hard to match," the announcement states. Oracle is going to prove with benchmarks that the world is better off with the Oracle-Sun marriage.

Thursday, 10 September 2009

Marketing bleating

Today I got an unrequested marketing mail (that would fit the term spam, right?), which stated that a certain well-known producer of security applications "launches as the first malware vendor their newest product: XXX", being a protection product for Virtual Machines.

Now, that starts me thinking. Am I going to buy virus or spam prevention software from a vendor that calls itself a "malware vendor"? Or is this company having it both ways? Feeding its own mouth? It even made me query wiki, to be certain that I understand it right: "malware is a 'collective name' for software that is destructive or 'ill-willed' and is a contraction of the English words malicious software" (freely translated from the Dutch wiki definition).

So apparently there was another marketing person not thinking this communiqué through.

Tuesday, 1 September 2009

Process E-Mail with Oracle BPEL PM 10.1.2

The BPEL version we use is still 10.1.2. I googled around to find some examples. I found a pretty good example from Lucas Jellema, who based his on an article by Matt Wright.

Initiating BPEL processes on incoming email is done through an Activation Agent. This is described in chapter 17.3.6 of the documentation.

Lucas used Apache James as a demo email server, described here. It is really simple to set up. Installation is nothing more than unzipping the download and starting it using run.bat or run.sh from the <james_home>/bin folder. Then you have to kill it once, since the first run is not really a startup. At first startup it apparently unpacks some libraries, etc. After the second startup you can telnet to it using telnet localhost 4555. Log in as root with password root and then you can add a user using adduser akkerm akkerm.

I had to process emails with attachments too. So I used Lucas' example Java code and extended it a little to be able to add an attachment as well. Here is my code:

package sendmail;

import java.io.File;

import java.util.Properties;

import javax.activation.DataHandler;

import javax.activation.FileDataSource;

import javax.mail.Address;

import javax.mail.Message;

import javax.mail.Multipart;

import javax.mail.Session;

import javax.mail.Transport;

import javax.mail.internet.InternetAddress;

import javax.mail.internet.MimeBodyPart;

import javax.mail.internet.MimeMessage;

import javax.mail.internet.MimeMultipart;

public class EmailSender {

public EmailSender() {

}

public static void sendEmail(String to, String subject, String from,

String content, String attachment) throws Exception {

Properties props = new Properties();

props.setProperty("mail.transport.protocol", "smtp");

props.setProperty("mail.host", "localhost");

props.setProperty("mail.user", "akkerm");

props.setProperty("mail.password", "");

Session mailSession = Session.getDefaultInstance(props, null);

Transport transport = mailSession.getTransport();

MimeMessage message = new MimeMessage(mailSession);

message.addFrom(new Address[] { new InternetAddress("akkerm@localhost",

from) }); // the reply to email address and a logical descriptor of the sender of the email!

message.setSubject(subject);

message.addRecipient(Message.RecipientType.TO,

new InternetAddress(to));

// Add content

MimeBodyPart mbp1 = new MimeBodyPart();

mbp1.setText(content);

MimeBodyPart mbp2 = new MimeBodyPart();

// attach the file to the message

FileDataSource fds = new FileDataSource(attachment);

mbp2.setDataHandler(new DataHandler(fds));

mbp2.setFileName(fds.getName());

// create the Multipart and its parts to it

Multipart mp = new MimeMultipart();

mp.addBodyPart(mbp1);

mp.addBodyPart(mbp2);

// add the Multipart to the message

message.setContent(mp);

transport.connect();

transport.sendMessage(message,

message.getRecipients(Message.RecipientType.TO));

transport.close();

}

public static void main(String[] args) throws Exception {

String content = "Some test content from akkerm.";

EmailSender.sendEmail("akkerm@localhost", "An interesting message",

"THE APP", content, "c:/temp/in/excelfile.xls");

}

}

To be able to fetch the attachment of the mail I did the following. First I added a variable, called MultiPart:

<variable name="MultiPart" element="ns1:multiPart"/>

Where ns1: is xmlns:ns1="http://services.oracle.com/bpel/mail", the name space of the added XMLSchema. When you receive the message, you get a content-element. But to be able to handle it as a multiple parts you have to copy it to a variable based on the multipart element from the XSD.

Then you can count the number of parts using the expression:

count(bpws:getVariableData('inputVariable','payload',

'/ns1:mailMessage/ns1:content/ns1:multiPart/ns1:bodyPart'))

You can copy that into a variable called PartsCount, of type xsd:int for example. To be able to loop over the parts you need an index as well:

<variable name="PartsCount" type="xsd:int"/>

<variable name="PartIndex" type="xsd:int"/>

Then you can use a while loop with a loop condition like the one below. Since XPath positions are 1-based, PartIndex should start at 1.

bpws:getVariableData('PartIndex')<=bpws:getVariableData('PartsCount')

Within the loop you can copy the body part to a variable Content that is also based on the multiPart element:

<variable name="Content" element="ns1:multiPart"/>

using the expression:

bpws:getVariableData('MultiPart','/ns1:multiPart/ns1:content/ns1:multiPart/ns1:bodyPart[bpws:getVariableData("PartIndex")]')

You need to copy that to the /ns1:multiPart/ns1:bodyPart part of the Content variable.

The content of a binary attachment is base64-encoded. If you want to save that to a file there are several options. Some of them are listed here. The most simple way to do that is to use a file write adapter. Configure it using the adapter wizard, and choose an "Opaque" xsd.

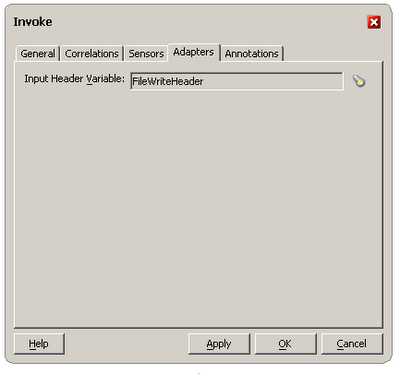

To be able to determine under which filename the file is written, you can use an adapter header variable. Create a variable with the message type based on the header type of the adapter WSDL:

<variable name="FileWriteHeader" messageType="ns5:OutboundHeader_msg"/>

In the Invoke of the FileWrite partnerlink, you can add it as a header variable to use.

I already wrote about mcf-properties and header variables here. I found that in SoaSuite 10.1.3 you can also direct the file adapter to write to a directory other than the one specified in the adapter wizard. But apparently this does not work in 10.1.2. You can override the directory via the header variable, by adding the property to the WSDL. But it keeps writing to the directory specified at design time. The filename, however, is taken from the header variable, so that you can change.
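If you ever need to save such a base64-encoded attachment outside BPEL, for instance in a standalone test tool, a few lines of Java will do. Mind the assumptions: this sketch uses java.util.Base64, which needs Java 8 or later, so it cannot run inside the Java 1.4 runtime of BPEL PM 10.1.2. The class name AttachmentDecoder is made up.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Base64;

final class AttachmentDecoder {

    /** Decodes base64 text as found in a mail body part. */
    static byte[] decode(String base64Content) {
        // The MIME decoder skips the line breaks that mail encoders insert into long base64 runs.
        return Base64.getMimeDecoder().decode(base64Content);
    }

    /** Decodes the content and writes it to the target file, replacing existing content. */
    static Path save(String base64Content, Path target) {
        try {
            return Files.write(target, decode(base64Content));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

For example, AttachmentDecoder.save(content, Paths.get("c:/temp/out/excelfile.xls")) would write the decoded attachment, with the path being just an illustration.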

Wednesday, 26 August 2009

Testing and exception handling with Ant

The importer used a property file in which it refers to the file with the data to import. So for every test case I had to create a test file and a configuration file. I named them like:

- test001_configuration.properties

- test001_medewlist.txt

- test002_configuration.properties

- test002_medewlist.txt

For the importer a working Ant build file had been created, with a run target. So I thought it would be nice to have a separate testBuild.xml file that does the job.

So I created this file, starting with a simple copy target that copies a backed-up original configuration file back to the conf directory. After my test I want to leave the project as it was committed to Subversion.

Then I created a macro-definition that gets the test-configuration-file as an attribute.

It copies this test configuration file over the actual configuration file. Then it tries to call the run-target of the main build file. And then it copies the original one back.

I found that the run target of the original file could fail, and in that case it should also restore the original configuration file instead of failing my test script. With ant-contrib you can use a try-catch-finally the same way as in Java.

Here's my resulting testBuild.xml:

<?xml version="1.0" encoding="UTF-8"?>
<project name="TestDirectoryServiceSynchronizer" basedir=".">
  <!-- Task Definition for ant-contrib.jar -->
  <taskdef resource="net/sf/antcontrib/antlib.xml">
    <classpath>
      <pathelement location="c:/repository/generic/support/common/lib/ant-contrib.jar"/>
    </classpath>
  </taskdef>
  <target name="copyOrgConfig">
    <echo>Copy Original Config-file Back</echo>
    <copy toFile="conf/configuration.properties" overwrite="true">
      <fileset file="test/configuration.properties.org" />
    </copy>
  </target>
  <target name="001-AddOneEmployee">
    <test-case configFile="test001_configuration.properties" />
  </target>
  <target name="002-RemoveOneEmployee">
    <test-case configFile="test002_configuration.properties" />
  </target>
  <macrodef name="test-case">
    <attribute name="configFile" default="attribute configFile not set" />
    <sequential>
      <echo>Copy Config-file @{configFile}</echo>
      <copy toFile="conf/configuration.properties" overwrite="true">
        <fileset file="test/@{configFile}" />
      </copy>
      <!-- call run -->
      <echo>Run DirectorySynchronizer</echo>
      <trycatch>
        <try>
          <ant antfile="${basedir}/build.xml" target="run" />
        </try>
        <catch>
          <echo>Investigate exceptions in the run!</echo>
        </catch>
        <finally>
          <antcall target="copyOrgConfig" />
        </finally>
      </trycatch>
    </sequential>
  </macrodef>
</project>
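A possible extension, as a sketch only: the trycatch task of ant-contrib also accepts a property attribute that receives the message of the caught exception, so the catch block can report what actually went wrong. The macro name test-case-logged, the project name, the all target and the property name error.&lt;configFile&gt; are my own illustrative names; the paths are the same as in the build file above.

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project name="TestDirectoryServiceSynchronizerAll" basedir="." default="all">
  <!-- Same ant-contrib location as in testBuild.xml -->
  <taskdef resource="net/sf/antcontrib/antlib.xml">
    <classpath>
      <pathelement location="c:/repository/generic/support/common/lib/ant-contrib.jar"/>
    </classpath>
  </taskdef>

  <!-- Hypothetical variant of test-case that records the failure message -->
  <macrodef name="test-case-logged">
    <attribute name="configFile"/>
    <sequential>
      <copy toFile="conf/configuration.properties" overwrite="true">
        <fileset file="test/@{configFile}"/>
      </copy>
      <!-- 'property' receives the message of the caught exception -->
      <trycatch property="error.@{configFile}">
        <try>
          <ant antfile="${basedir}/build.xml" target="run"/>
        </try>
        <catch>
          <echo>Run with @{configFile} failed: ${error.@{configFile}}</echo>
        </catch>
        <finally>
          <!-- Always restore the original configuration -->
          <copy toFile="conf/configuration.properties" overwrite="true">
            <fileset file="test/configuration.properties.org"/>
          </copy>
        </finally>
      </trycatch>
    </sequential>
  </macrodef>

  <!-- Runs both test cases in one go; a failing run does not stop the next one -->
  <target name="all">
    <test-case-logged configFile="test001_configuration.properties"/>
    <test-case-logged configFile="test002_configuration.properties"/>
  </target>
</project>
```

Using a property name per configuration file keeps the messages of the separate test cases apart, since Ant properties are normally set only once per run.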

Tuesday, 21 July 2009

Installing Oracle Forms 11

After extracting the two downloaded files ..disk1_1of2 and ..disk1_2of2 I got 4 (;-)) disk directories (disk1, disk2, disk3 and disk4).

I doubleclicked the setup.exe in the root of the disk1 folder. The following splash screen popped up:

After a while the following screen popped up:

The interface of the installation of Oracle Forms (and Reports and Discoverer) has significantly changed. Step 1 out of 16….

Before proceeding, make sure you have installed the WebLogic Server. I installed JDeveloper 11g before, so I already had the WebLogic Server installed.

The oc4j that I used for running Oracle Forms 10g on my laptop is no longer supported. The same goes for Oracle JInitiator: no longer supported. Instead of that you have to use the Sun Java plug-in (also owned by Oracle now).

Choose the correct installation type.

Checking prerequisites….

Choose the Oracle Middleware location. This is where I installed the middleware home of JDeveloper 11g.

Choose the correct components. I want to install Oracle Forms and Reports including the correct server components.

And after accepting the defaults in the following steps I could finally choose [Finish], and the installation started.

After half an hour of installation I reached 100%, but I was not able to push the [Finish] button. What is happening? In the left window I see that after the installation process the configuration process should start. But all I see is Setup Completed. After staring at the screen for some minutes I suddenly see that the 100% progress decreases to 97%???

The installer installs a one-off patch, which probably was downloaded from the internet beforehand. This is new: in earlier versions you could install Oracle Forms without having a connection to the internet. You had to monitor Metalink to see if there were any patches and had to install them separately. I am wondering if there is an option in Forms Builder to check for updates, like there is in JDeveloper!

After installing the one-off patch the configuration is started.

After 90 minutes the installation is finished. Let’s see if I can start Forms Builder. In the start menu it is listed under Oracle Classic instance. (on OTN it is called traditional.....)

And it works.

Unfortunately I see no option to check for updates.