Introduction

At a recent customer I got the assignment to implement a SAML 2.0 configuration.

In this setup the customer is a Service Provider. They provide a student-administration application for the Dutch higher education sector, such as colleges and universities. Conventionally the application is installed on premise, but they would like to move to a SaaS model. One institute is going to use the application from 'the cloud'. In the Dutch education sector, an organization called SurfConext serves as an authentication broker.

A good schematic explanation of the setup is in the Weblogic 11g docs:

When a user connects to the application, Weblogic finds that the user is not authenticated: it lacks a SAML2.0 token (2). So when configured correctly the browser is rerouted to SurfConext (3). On an authentication request SurfConext displays a so-called ‘Where Are You From’ (WAYF) page, on which a user can choose the institute to which he or she is connected. SurfConext then provides a means to enter the username and password (4). On submit SurfConext validates the credentials against the actual IdP, which is provided by the user’s institute (5). On a valid authentication, SurfConext provides a SAML2.0 token identifying the user with possible assertions (6). The page is refreshed and redirected to the landing page of the application (7).

For Weblogic, SurfConext is the Identity Provider, although in fact, based on the choice on the WAYF page, it reroutes the authentication request to the IdP of the particular institute.

Unfortunately I did not find a how-to for this particular setup in the docs, although I found this. But I did find the following blog, which helped me a lot: https://blogs.oracle.com/blogbypuneeth/entry/steps_to_configure_saml_2. Basically, the setup is only the service provider part of that description.

So let me walk you through it. This is a longer blog post; in fact I copied and pasted large parts of it from the configuration document I wrote for the customer.

Configure Service provider

Pre-Requisites

To be able to test the setup against a test IdP of SurfConext, the configured Weblogic server needs to be reachable from the internet. Appropriate firewall and proxy-server configuration needs to be done up front, so that both SurfConext and a remote user can connect to the Weblogic server.

All configuration regarding URLs needs to be done using the external URLs configured above.

A PC with a direct internet connection that can connect through these same URLs is needed to test the configuration. In my case, connecting a PC to the customer's intranet did give it internet access, but the internal network configuration prevented it from connecting to the Weblogic server using the remote URLs.

During the configuration a so-called SAML metadata file is created. SurfConext requests this file to get acquainted with the Service Provider. Because its content can change with reconfigurations, SurfConext fetches it through an HTTPS URL. This URL needs to be configured and must be remotely reachable as well. An option is the htdocs folder of a web server that is reachable through HTTPS. In other SAML2 setups you might need to upload the metadata file to the identity provider's server.

You also need the SAML metadata of SurfConext. It can be downloaded from:

https://wiki.surfnet.nl/display/surfconextdev/Connection+metadata.

Update Application

The application needs to be updated and redeployed to use the Weblogic authenticators instead of the native logon form. To do so, the web.xml needs to be updated. In the web.xml (in the WEB-INF folder of the application war file) look for the following part:

    <login-config>
      <auth-method>FORM</auth-method>
      <realm-name>jazn.com</realm-name>
      <form-login-config>
        <form-login-page>/faces/security/pages/Login.jspx</form-login-page>
        <form-error-page>/loginErrorServlet</form-error-page>
      </form-login-config>
    </login-config>

And replace it with:

    <login-config>
      <auth-method>BASIC</auth-method>
      <realm-name>myrealm</realm-name>
    </login-config>

Repackage and redeploy the application to weblogic.

Add a new SAML2IdentityAsserter

Here we start with the first steps to configure Weblogic: create a SAML2IdentityAsserter on the Service Provider domain.

- Login to ServiceProvider domain - Weblogic console

- Navigate to “Security Realms”:

- Click on ”myrealm”

- Go to the tab ”Providers–>Authentication” :

- Add a new “SAML2IdentityAsserter”

- Name it for example: “SurfConextIdentityAsserter”:

- Click Ok, Save and activate changes if you're in a production domain (I'm not going to repeat that every time again in the rest of this blog).

- Bounce the domain (All WLServers including AdminServer)

Configure managed server to use SAML2 Service Provider

In this part the managed server(s) serving the application need to be configured for the so-called 'federation services'. Each server needs to know how to behave as a SAML 2.0 Service Provider.

So perform the following steps:

- Navigate to the managed server, and select the “Federation Services–>SAML 2.0 Service Provider” sub tab:

- Edit the following settings:

| Field | Value |
|---|---|
| Enabled | Check |
| Preferred Binding | POST |
| Default URL | http://hostname:portname/application-URI. This URL should be accessible from outside the organization, that is from SurfConext. |

- Click Save.

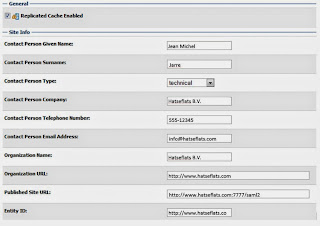

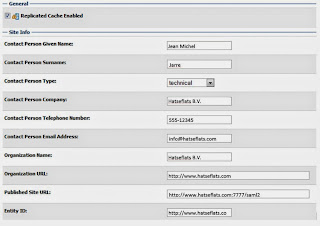

- Navigate to the managed server, and select the “Federation Services–>SAML 2.0 General” sub tab:

- Edit the following settings:

| Field | Value |

|---|

| Replicated Cache Enabled | Uncheck or Check if needed |

| Contact Person Given Name | Eg. Jean-Michel |

| Contact Person Surname | Eg. Jarre |

| Contact Person Type | Choose one from the list, like 'technical'. |

| Contact Person Company | Eg. Darwin-IT Professionals |

| Contact Person Telephone Number | Eg. 555-12345 |

| Contact Person Email Address | info@hatseflats.com |

| Organization Name | Eg. Hatseflats B.V. |

| Organization URL | www.hatseflats.com |

| Published Site URL | http://www.hatseflats.com:7777/saml2

This URL should be accessible from outside the organization, that is from SurfConext. The Identity Provider needs to be able to connect to it. |

| Entity ID | Eg. http://www.hatseflats.com

SurfConext expect an URI with at least a colon (‘:’), usually the URL of the SP. |

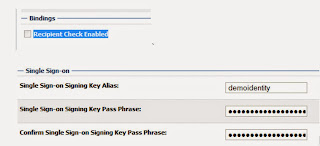

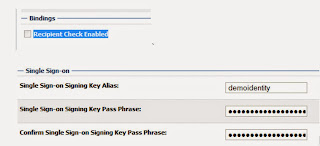

| Recipient Check Enabled | Uncheck.

When checked Weblogic will check the responding Url to the URL in the original request. This could result in a ‘403 Forbidden’ message. |

| Single Sign-on Signing Key Alias | demoidentity

If signing is used the alias of the proper private certificate in the keystore that is configured in WLS is to be provided. |

| Single Sign-on Signing Key Pass Phrase | DemoIdentityPassPhrase |

| Confirm Single Sign-on Signing Key Pass Phrase | DemoIdentityPassPhrase |
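The requirement that the Entity ID is a URI containing a colon can be checked before handing the value to SurfConext. The sketch below is only an illustration and not part of the Weblogic configuration: the class name `EntityIdCheck` and its method are made up for this example. It assumes that "a URI with at least a colon" can be read as "an absolute URI", that is, one with a scheme such as `http:` or `urn:`.

```java
import java.net.URI;
import java.net.URISyntaxException;

public class EntityIdCheck {

    /**
     * Returns true when the value parses as an absolute URI,
     * i.e. it has a scheme followed by a colon.
     */
    public static boolean isAbsoluteUri(String entityId) {
        try {
            return new URI(entityId).isAbsolute();
        } catch (URISyntaxException e) {
            // Not parseable as a URI at all
            return false;
        }
    }

    public static void main(String[] args) {
        // Example value from the table above
        System.out.println(isAbsoluteUri("http://www.hatseflats.com"));
        // A bare name without scheme is a relative URI and is rejected
        System.out.println(isAbsoluteUri("hatseflats"));
    }
}
```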

- Save the changes and export the Service Provider metadata into an XML file:

- Restart the server

- Click on 'Publish Meta Data'

- Provide a valid path, like /home/oracle/Documents/... and click 'OK'.

- Copy this file to a location on an HTTP server that is remotely reachable through HTTPS and provide the URL to SurfConext.
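Before handing the URL to SurfConext it can help to check that the published file contains the values you configured. The following is a minimal sketch using only the JDK's XML parser; the class `SpMetadataCheck` and the sample document are made up for illustration (in particular the `Location` value is a placeholder, not copied from a real export). It reads the `entityID` of the root element and the `Location` of the first `AssertionConsumerService` element in the SAML 2.0 metadata namespace.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

public class SpMetadataCheck {

    static final String MD_NS = "urn:oasis:names:tc:SAML:2.0:metadata";

    // Hand-written sample for illustration only, not a real Weblogic export
    public static final String SAMPLE =
        "<md:EntityDescriptor xmlns:md=\"" + MD_NS + "\""
        + " entityID=\"http://www.hatseflats.com\">"
        + "<md:SPSSODescriptor protocolSupportEnumeration=\"urn:oasis:names:tc:SAML:2.0:protocol\">"
        + "<md:AssertionConsumerService index=\"0\""
        + " Binding=\"urn:oasis:names:tc:SAML:2.0:bindings:HTTP-POST\""
        + " Location=\"http://www.hatseflats.com:7777/saml2/sp/acs/post\"/>"
        + "</md:SPSSODescriptor>"
        + "</md:EntityDescriptor>";

    /** Returns { entityID, Location of the first AssertionConsumerService or null }. */
    public static String[] summarize(String metadataXml) {
        try {
            DocumentBuilderFactory factory = DocumentBuilderFactory.newInstance();
            factory.setNamespaceAware(true);
            Document doc = factory.newDocumentBuilder().parse(
                new ByteArrayInputStream(metadataXml.getBytes(StandardCharsets.UTF_8)));
            String entityId = doc.getDocumentElement().getAttribute("entityID");
            NodeList acs = doc.getElementsByTagNameNS(MD_NS, "AssertionConsumerService");
            String location = acs.getLength() > 0
                ? ((Element) acs.item(0)).getAttribute("Location")
                : null;
            return new String[] { entityId, location };
        } catch (Exception e) {
            throw new IllegalArgumentException("Could not parse metadata", e);
        }
    }

    public static void main(String[] args) {
        String[] summary = summarize(SAMPLE);
        System.out.println("entityID: " + summary[0]);
        System.out.println("ACS URL : " + summary[1]);
    }
}
```

Both values should use the external host name; an internal host name in the assertion consumer URL usually means the Published Site URL was entered with the wrong address.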

Configure Identity Provider metadata on SAML Service Provider in Managed Server

Add new “Web Single Sign-On Identity Provider Partner” named for instance "SAML_SSO_SurfConext".

- In Admin Console navigate to the myrealm Security Realm and select the “Providers–>Authentication”

- Select the SurfConextIdentityAsserter SAML2_IdentityAsserter and navigate to the “Management” tab:

- Add a new “Web Single Sign-On Identity Provider Partner”

- Name it: SAML_SSO_SurfConext

- Select “SurfConext-metadata.xml”

- Click 'OK'.

- Edit the created SSO Identity Provider Partner “SAML_SSO_SurfConext” and provide the following settings:

| Field | Value |
|---|---|
| Name | SAML_SSO_SurfConext |
| Enabled | Check |
| Description | SAML Single Sign On partner SurfConext |
| Redirect URIs | /YourApplication-URI. These are URIs relative to the root of the server. |

Add SAMLAuthenticationProvider

In this section an Authentication provider is added.

- Navigate to the ‘Providers->Authentication’ sub tab of the ‘myrealm’ Security Realm:

- Add a new Authentication Provider. Name it: ‘SurfConextAuthenticator’ and select as type: 'SAMLAuthenticator'.

Click on the new Authenticator and set the Control Flag to ‘SUFFICIENT’:

- Return to the authentication providers and click on 'Reorder'.

Use the selection boxes and the arrow buttons to reorder the providers as follows:

The SurfConext authenticator and Identity Asserter should be first in the sequence.

Set all other authentication providers to sufficient

The control flag of the DefaultAuthenticator is set to ‘REQUIRED’ by default. That means that this provider must succeed for every authentication request. For the application, however, we want the SAML authentication to be sufficient, so that the other authenticators need not be executed. So set the other ones (the DefaultAuthenticator and any others that exist) to ‘SUFFICIENT’ as well.
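The effect of the control flags can be made concrete with a small simulation. The sketch below is a simplified model of the JAAS control-flag rules that the Weblogic flags are named after; it is not Weblogic code, and the class `ControlFlagDemo` and the provider names are invented for the example. To make the effect visible it deliberately places a REQUIRED provider first and models a user who is only known through SurfConext.

```java
import java.util.Arrays;
import java.util.List;

public class ControlFlagDemo {

    public enum Flag { REQUIRED, REQUISITE, SUFFICIENT, OPTIONAL }

    /** One configured provider: its flag and whether it accepts the current user. */
    public static final class Provider {
        final String name;
        final Flag flag;
        final boolean accepts;

        public Provider(String name, Flag flag, boolean accepts) {
            this.name = name;
            this.flag = flag;
            this.accepts = accepts;
        }
    }

    /** Evaluates the providers in order, following the JAAS control-flag rules. */
    public static boolean authenticate(List<Provider> providers) {
        boolean requiredSeen = false;     // a REQUIRED or REQUISITE provider is configured
        boolean requiredFailed = false;   // a REQUIRED provider rejected the user
        boolean optionalSucceeded = false;
        for (Provider p : providers) {
            switch (p.flag) {
                case REQUIRED:
                    requiredSeen = true;
                    if (!p.accepts) requiredFailed = true; // keep going, but the result is a failure
                    break;
                case REQUISITE:
                    requiredSeen = true;
                    if (!p.accepts) return false;          // stop immediately
                    break;
                case SUFFICIENT:
                    if (p.accepts && !requiredFailed) return true; // stop immediately, success
                    break;
                case OPTIONAL:
                    if (p.accepts) optionalSucceeded = true;
                    break;
            }
        }
        if (requiredFailed) return false;
        // Without REQUIRED/REQUISITE providers at least one other provider must succeed
        return requiredSeen || optionalSucceeded;
    }

    public static void main(String[] args) {
        // User known to SurfConext only: the default provider rejects, the SAML provider accepts
        List<Provider> defaultRequired = Arrays.asList(
            new Provider("DefaultAuthenticator", Flag.REQUIRED, false),
            new Provider("SurfConextAuthenticator", Flag.SUFFICIENT, true));
        List<Provider> allSufficient = Arrays.asList(
            new Provider("SurfConextAuthenticator", Flag.SUFFICIENT, true),
            new Provider("DefaultAuthenticator", Flag.SUFFICIENT, false));
        System.out.println("REQUIRED default first: " + authenticate(defaultRequired));
        System.out.println("All SUFFICIENT:         " + authenticate(allSufficient));
    }
}
```

In this model a failing REQUIRED provider makes the whole login fail even though the SAML provider accepted the user, while a chain of SUFFICIENT providers succeeds as soon as one of them accepts.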

Enable debug on SAML

To enable debug messages on SAML, navigate to the 'Debug' tab of the Managed Server:

Expand the nodes ‘weblogic -> security’. Check the node ‘Saml2’ and click 'Enable'.

This will add SAML2 related logging during authentication processes to the server.log.

To disable the logging, check the node or higher level nodes and click 'Disable'.

Deploy the Identity Name Mapper

SurfConext generates a userid for each connected user. It provides two options for this: a persistent userid across all sessions, or a userid per session. Either way, the userid is generated as a GUID that is not registered within the customer's application and that by itself cannot be related to known users in the application.

In the SAML token however, also the username is provided. To map this to the actual userid that Weblogic provides to the application, an IdentityMapper class is needed.

The class implements a certain interface of weblogic, and uses a custom principal class that implements a weblogic interface as well. The implementation is pretty straightforward. I found an example that uses an extra bean for a Custom Principal. The IdentityMapper class is as follows:

package nl.darwin-it.saml-example;

import com.bea.security.saml2.providers.SAML2AttributeInfo;

import com.bea.security.saml2.providers.SAML2AttributeStatementInfo;

import com.bea.security.saml2.providers.SAML2IdentityAsserterAttributeMapper;

import com.bea.security.saml2.providers.SAML2IdentityAsserterNameMapper;

import com.bea.security.saml2.providers.SAML2NameMapperInfo;

import java.security.Principal;

import java.util.ArrayList;

import java.util.Collection;

import java.util.logging.Logger;

import weblogic.logging.LoggingHelper;

import weblogic.security.service.ContextHandler;

public class SurfConextSaml2IdentityMapper implements SAML2IdentityAsserterNameMapper,

SAML2IdentityAsserterAttributeMapper {

public static final String ATTR_PRINCIPALS = "com.bea.contextelement.saml.AttributePrincipals";

public static final String ATTR_USERNAME = "urn:mace:dir:attribute-def:uid";

private Logger lgr = LoggingHelper.getServerLogger();

private final String className = "SurfConextSaml2IdentityMapper";

@Override

public String mapNameInfo(SAML2NameMapperInfo saml2NameMapperInfo,

ContextHandler contextHandler) {

final String methodName = className + ".mapNameInfo";

debugStart(methodName);

String user = null;

debug(methodName,

"saml2NameMapperInfo: " + saml2NameMapperInfo.toString());

debug(methodName, "contextHandler: " + contextHandler.toString());

debug(methodName,

"contextHandler number of elements: " + contextHandler.size());

// getNames gets a list of ContextElement names that can be requested.

String[] names = contextHandler.getNames();

// For each possible element

for (String element : names) {

debug(methodName, "ContextHandler element: " + element);

// If one of those possible elements has the AttributePrinciples

if (element.equals(ATTR_PRINCIPALS)) {

// Put the AttributesPrincipals into an ArrayList of CustomPrincipals

ArrayList<CustomPrincipal> customPrincipals =

(ArrayList<CustomPrincipal>)contextHandler.getValue(ATTR_PRINCIPALS);

int i = 0;

String attr;

if (customPrincipals != null) {

// For each AttributePrincipal in the ArrayList

for (CustomPrincipal customPrincipal : customPrincipals) {

// Get the Attribute Name and the Attribute Value

attr = customPrincipal.toString();

debug(methodName, "Attribute " + i + " Name: " + attr);

debug(methodName,

"Attribute " + i + " Value: " + customPrincipal.getCollectionAsString());

// If the Attribute is "loginAccount"

if (attr.equals(ATTR_USERNAME)) {

user = customPrincipal.getCollectionAsString();

// Remove the "@DNS.DOMAIN.COM" (case insensitive) and set the username to that string

if (!user.equals("null")) {

user = user.replaceAll("(?i)\\@CLIENT\\.COMPANY\\.COM", "");

debug(methodName, "Username (from loginAccount): " + user);

break;

}

}

i++;

}

}

// The ArrayList of CustomPrincipals was blank, or the attribute had no value (getCollectionAsString() then returns the string "null") - just set the username to the Subject

if (user == null || "".equals(user) || "null".equals(user)) {

user = saml2NameMapperInfo.getName(); // Subject = BRID

debug(methodName, "Username (from Subject): " + user);

}

return user;

}

}

// Just in case AttributePrincipals does not exist

user = saml2NameMapperInfo.getName(); // Subject = BRID

debug(methodName, "Username (from Subject): " + user);

debugEnd(methodName);

// Set the username to the Subject

return user;

// debug(methodName,"com.bea.contextelement.saml.AttributePrincipals: " + arg1.getValue(ATTR_PRINCIPALS));

// debug(methodName,"com.bea.contextelement.saml.AttributePrincipals CLASS: " + arg1.getValue(ATTR_PRINCIPALS).getClass().getName());

// debug(methodName,"ArrayList toString: " + arr2.toString());

// debug(methodName,"Initial size of arr2: " + arr2.size());

}

/* public Collection<Object> mapAttributeInfo0(Collection<SAML2AttributeStatementInfo> attrStmtInfos, ContextHandler contextHandler) {

final String methodName = className+".mapAttributeInfo0";

if (attrStmtInfos == null || attrStmtInfos.size() == 0) {

debug(methodName,"CustomIAAttributeMapperImpl: attrStmtInfos has no elements");

return null;

}

Collection<Object> customAttrs = new ArrayList<Object>();

for (SAML2AttributeStatementInfo stmtInfo : attrStmtInfos) {

Collection<SAML2AttributeInfo> attrs = stmtInfo.getAttributeInfo();

if (attrs == null || attrs.size() == 0) {

debug(methodName,"CustomIAAttributeMapperImpl: no attribute in statement: " + stmtInfo.toString());

} else {

for (SAML2AttributeInfo attr : attrs) {

if (attr.getAttributeName().equals("AttributeWithSingleValue")){

CustomPrincipal customAttr1 = new CustomPrincipal(attr.getAttributeName(), attr.getAttributeNameFormat(),attr.getAttributeValues());

customAttrs.add(customAttr1);

}else{

String customAttr = new StringBuffer().append(attr.getAttributeName()).append(",").append(attr.getAttributeValues()).toString();

customAttrs.add(customAttr);

}

}

}

}

return customAttrs;

} */

public Collection<Principal> mapAttributeInfo(Collection<SAML2AttributeStatementInfo> attrStmtInfos,

ContextHandler contextHandler) {

final String methodName = className + ".mapAttributeInfo";

Collection<Principal> principals = null;

if (attrStmtInfos == null || attrStmtInfos.size() == 0) {

debug(methodName, "AttrStmtInfos has no elements");

} else {

principals = new ArrayList<Principal>();

for (SAML2AttributeStatementInfo stmtInfo : attrStmtInfos) {

Collection<SAML2AttributeInfo> attrs = stmtInfo.getAttributeInfo();

if (attrs == null || attrs.size() == 0) {

debug(methodName,

"No attribute in statement: " + stmtInfo.toString());

} else {

for (SAML2AttributeInfo attr : attrs) {

CustomPrincipal principal =

new CustomPrincipal(attr.getAttributeName(),

attr.getAttributeValues());

/* new CustomPrincipal(attr.getAttributeName(),

attr.getAttributeNameFormat(),

attr.getAttributeValues()); */

debug(methodName, "Add principal: " + principal.toString());

principals.add(principal);

}

}

}

}

return principals;

}

private void debug(String methodName, String msg) {

lgr.fine(methodName + ": " + msg);

}

private void debugStart(String methodName) {

debug(methodName, "Start");

}

private void debugEnd(String methodName) {

debug(methodName, "End");

}

}

The commented method ‘public Collection<Object> mapAttributeInfo0’ is left in the source as an example method.
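Stripped of the Weblogic interfaces and the logging, the name mapping boils down to a few lines. The sketch below restates that logic with plain JDK types so it can be run and tested outside Weblogic; the class `UsernameMappingSketch` and the map-based input are assumptions made for this illustration and are not part of the deployed mapper. The domain suffix is the same placeholder as in the mapper class.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

public class UsernameMappingSketch {

    static final String ATTR_USERNAME = "urn:mace:dir:attribute-def:uid";

    // Placeholder domain suffix, matched case-insensitively
    static final String DOMAIN_SUFFIX_REGEX = "(?i)@CLIENT\\.COMPANY\\.COM";

    /**
     * Returns the first value of the uid attribute without the domain suffix,
     * or the SAML subject name when the attribute is missing or empty.
     */
    public static String mapName(Map<String, List<String>> attributes, String subjectName) {
        List<String> values = attributes.get(ATTR_USERNAME);
        if (values != null && !values.isEmpty()) {
            String first = values.get(0);
            if (first != null && !first.isEmpty()) {
                return first.replaceAll(DOMAIN_SUFFIX_REGEX, "");
            }
        }
        return subjectName;
    }

    public static void main(String[] args) {
        Map<String, List<String>> attributes = new HashMap<String, List<String>>();
        attributes.put(ATTR_USERNAME, Arrays.asList("student1@client.company.com"));
        // Attribute present: the suffix is stripped
        System.out.println(mapName(attributes, "guid-123"));
        // Attribute absent: fall back to the subject
        System.out.println(mapName(Collections.<String, List<String>>emptyMap(), "guid-123"));
    }
}
```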

The CustomPrincipal bean:

package nl.darwin-it.saml-example;

import java.util.Collection;

import java.util.Iterator;

import weblogic.security.principal.WLSAbstractPrincipal;

import weblogic.security.spi.WLSUser;

public class CustomPrincipal extends WLSAbstractPrincipal implements WLSUser{

private String commonName;

private Collection collection;

public CustomPrincipal(String name, Collection collection) {

super();

// Feed the WLSAbstractPrincipal.name. Mandatory

this.setName(name);

this.setCommonName(name);

this.setCollection(collection);

}

public CustomPrincipal() {

super();

}

public CustomPrincipal(String commonName) {

super();

this.setName(commonName);

this.setCommonName(commonName);

}

public void setCommonName(String commonName) {

// Feed the WLSAbstractPrincipal.name. Mandatory

super.setName(commonName);

this.commonName = commonName;

System.out.println("Attribute: " + this.getName());

// System.out.println("Custom Principle commonName is " + this.commonName);

}

public Collection getCollection() {

return collection;

}

public String getCollectionAsString() {

String collasstr;

if(collection != null && collection.size()>0){

for (Iterator iterator = collection.iterator(); iterator.hasNext();) {

collasstr = (String) iterator.next();

return collasstr;

}

}

return "null";

}

public void setCollection(Collection collection) {

this.collection = collection;

// System.out.println("set collection in CustomPrinciple!");

if(collection != null && collection.size()>0){

for (Iterator iterator = collection.iterator(); iterator.hasNext();) {

final String value = (String) iterator.next();

System.out.println("Attribute Value: " + value);

}

}

}

@Override

public int hashCode() {

final int prime = 31;

int result = super.hashCode();

result = prime * result + ((collection == null) ? 0 : collection.hashCode());

result = prime * result + ((commonName == null) ? 0 : commonName.hashCode());

return result;

}

@Override

public boolean equals(Object obj) {

if (this == obj)

return true;

if (!super.equals(obj))

return false;

if (getClass() != obj.getClass())

return false;

CustomPrincipal other = (CustomPrincipal) obj;

if (collection == null) {

if (other.collection != null)

return false;

} else if (!collection.equals(other.collection))

return false;

if (commonName == null) {

if (other.commonName != null)

return false;

} else if (!commonName.equals(other.commonName))

return false;

return true;

}

}

Package the classes as a Java archive (jar) and place it in a folder on the Weblogic server, for instance $DOMAIN_HOME/lib.

Although $DOMAIN_HOME/lib is on the classpath for many usages, for this usage the jar file is not picked up by the class loaders, probably due to the class-loader hierarchy.

To get the jar file (SurfConextSamlIdentityMapper.jar) on the system classpath, add the complete path to the jar file to the classpath on the Startup tab of both the AdminServer and the managed server. The AdminServer needs it because the class is configured through the realm, and during configuration the existence of the class is checked. Apparently it is also required to add weblogic.jar before SurfConextSamlIdentityMapper.jar on the startup classpath.

Then restart the AdminServer as well as the managed servers.
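If the console later complains that the mapper class cannot be found, it can be useful to test the classpath outside Weblogic first. The helper below is a hypothetical diagnostic, not something Weblogic provides: run it with the same classpath you entered on the Startup tab and pass the fully qualified class name as argument. It only tries to locate the class and does not initialize it.

```java
public class MapperClasspathCheck {

    /** Returns true when the named class can be found by this class's class loader. */
    public static boolean isLoadable(String className) {
        try {
            // 'false' means: locate and load the class, but do not run its static initializers
            Class.forName(className, false, MapperClasspathCheck.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // Without an argument a class that always exists is used, just to show the output format
        String name = args.length > 0 ? args[0] : "java.lang.String";
        System.out.println(name + " loadable: " + isLoadable(name));
    }
}
```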

Configure the Identity Name Mapper

Now the Identity Name mapper class can be configured:

- In Admin Console navigate to the myrealm Security Realm and select the “Providers–>Authentication”

- Select the SurfConextIdentityAsserter SAML2_IdentityAsserter and navigate to the “Management” tab:

- Edit the created SSO Identity Provider Partner “SAML_SSO_SurfConext”.

Provide the following settings:

| Field | Value |
|---|---|
| Identity Provider Name Mapper Class Name | nl.darwin-it.saml-example.SurfConextSaml2IdentityMapper |

Test the application

At this point the application can be tested. Browse using the external connected PC to the application using the remote URL. For instance:

https://www.hatseflats.com:7777/YourApplication-URI.

If all is well, the browser is redirected to SurfConext’s WhereAreYouFrom page. Choose the following provider:

Connect as ‘student1’ with password ‘student1’ (or one of the other test credentials like student2 or student3, see https://wiki.surfnet.nl/display/surfconextdev/Test+and+Guest+Identity+Providers).

After a successful logon, the browser should be redirected to the application. The chosen credential should of course be known as a userid in the application.

Conclusion

This is one of the bigger stories on this blog. I actually edited the configuration document into a blog entry. I hope you'll find it useful. With this blog you have a complete how-to for the Service Provider part of a Service Provider initiated SSO setup.

SAML2 seemed complicated to me at first, and under the covers it still might be. But it turns out that Weblogic 11g has a great implementation of it that is neatly configurable. It's a bit of a pity that you need a mapper class for the identity mapping. It would be nice if you could configure which attribute value is returned as the userid. But the mapper class is not that complicated.