This article feels like I should have written years ago. As it is, I haven't, but let's do it anyway.

A few weeks ago a (somewhat older) question on community.oracle.com caught my attention. It was about how to do logging in Oracle SOA Suite.

This very much possible, and it can be simply done using an Embedded Java activity. However, if you want to have multiple loggings in a larger BPEL process, or have multiple BPEL components in a composite with multiple loggings scattered all over them, then Embedded Java activities aren't that practical.

So, I have developed a bit more sophisticated solution. For just one logging, it is a bit overdone, but I find it more practical with multiple loggings.

It starts with a quite simple Log wrapper class, that I added to GitHub. It is a wrapper around Java Util Logging, and helps with instantiating with a Logger instance. One of the constructors takes in a compositeName and componentName:

public class Log {

private static final String BASE_PACKAGE="oracle.soa.bpel";

private static Logger log;

private String className;

...

public Log(Class loggingClass) {

super();

setClassName(loggingClass.getName());

log = Logger.getLogger(getClassName());

}

public Log(String loggingClass) {

super();

setClassName(loggingClass);

log = Logger.getLogger(getClassName());

}

public Log(String compositeName, String componentName) {

super();

String loggingClass = BASE_PACKAGE+"."+compositeName+"."+componentName;

setClassName(loggingClass);

log = Logger.getLogger(getClassName());

}

An important aspect here is the static variable BASE_PACKAGE which is set to "oracle.soa.bpel". Which I'll get back to in a minute. The constructor uses this, and the compositeName and compentName to build up a sort of className, that it prefixes with the BASE_PACKAGE.

It also has some logging methods, that requires a methodName, that it uses as an extra identifier for the logging, added to the full class Name.

This class I 'deployed' to a jar file. This makes it reusable in multiple composites, while the source is versioned only once.

Add it to the SCA_INF/lib folder of your composite:

But you could probably also add it to the oracle.soa.ext_11.1.1 folder in your $MW_HOME/soa/soa/modules folder and run the Ant script there:After running Ant in that folder, you should restart the server. The Ant script will add all the jar files in that folder, including yours, to the manifest file of the oracle.soa.ext.jar file in that folder. Doing so, it will be appended to the Classpath of SOA Suite.

To use this in your BPEL, it is important to add the following line at the beginning:

<import location="nl.darwinit.soautils.logging.Log" importType="http://schemas.oracle.com/bpel/extension/java"/>

Like this:

Having done that you can use the Log class in the Embbeded Java activity. To begin with, I find it usefull to add an Embedded Java to a scope which contains simple xsd:string based variables. Using an Assign you can easily assing proper values to the local variables:

The compositeName and componentName variables can be filled with ora:getCompositeName() and ora:getComponentName() respectively. Doing so makes it easier to access these values in an Embedded Java activity. The Java snippet Embedded Java in my example project is:

String compositeName = (String) getVariableData("compositeName");

String componentName = (String) getVariableData("componentName");

String text = (String) getVariableData("text");

String methodName= (String) getVariableData("methodName");

Log log = new Log(compositeName,componentName);

String message="**** BPEL "+methodName +" " + text +" ****";

log.info(methodName, message);

addAuditTrailEntry(message);The addAuditTrailEntry() shown in this snippet is an API that adds the message to the flowtrace also:

So not necessary for logging and also not specifically in scope of this article, but good to mention.

The message build up in this snippet is a concatenation of: "**** BPEL "+methodName +" " + text +" ****". This maybe handy in the AuditTrail, but in the log you may want to show just the text, like: log.info(methodName, text).

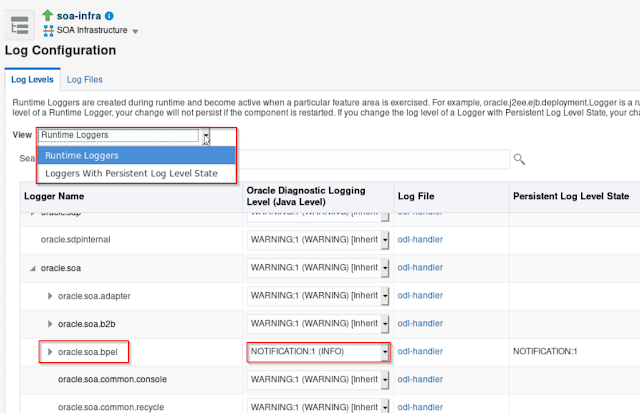

Earlier I mentioned the BASE_PACKAGE variable in the Log class. This refers to the oracle.soa.bpel log-appender. This can be configured in the soa-infra Log Configuration:

And then:

You could add a custom Logger, but it is easier to use an existing one. And to me it makes sense to use the oracle.soa.bpel Logger. If you would choose to use another logger, you would need to change the BASE_PACKAGE variable in the class.

Make sure it has a severity or Log Level low enough to cater for your logging. Set it to the Runtime Logger, but for persistence purpose you probably would need to add it to the "Loggers with Persistent Log Level State". For changing the Runtime logger, you would not need to restart the server. You do need to make sure that the "minimum severity to log" on the server in the WebLogic console.

Before you test, it can be handy to "tail" the diagnostic log as follows:

[oracle@ol7vm logs]$ tail -f DefaultServer-diagnostic.log |grep oracle.soa.bpel

I added my Demo BPEL process also to GitHub. If you test it, the output will be as follows:

So that works! Easy, right?

Now, this works for one simple Log in a BPEL process. But what about if you want to trace the flow using multiple Logs. And maybe even, in fault handlers log particular errors. The scope I introduced can be converted to a subProcess:

I renamed the SubProcess to Log and then you can remove the Assign:

The scope is replaced with a call activity, that can be renamed. The scope variables now function as call arguments:

You can copy&paste this and rename it to reflect for instance a LogEnd activity:

Testing this, gives the following output:

As can be seen, now a simple log is done using a simple call activity. In this example the Log subprocess is within the same BPEL process, so you could move the setting of the componentName and compositeName variables in an assign in the subProcess, re-enstating the original assign.

However, you could of course move the Embedded Subprocess to a Reusable Subprocess. And then it might be usefull to be able to provide at least the componentName as an argument.

I think it is not so useful to put it in a separate BPEL process that can be called from external composites. In that case you would need to do an invoke with an accompanying assign and variable declarations for each Logging. So I would prefer to define either an embedded or a reusable subprocess for each composite/bpel that you want to do logging in.

Although I experience this article a few years late, I hope it does help.