Introduction

BPEL Process Manager is made to orchestrate services. It is a good tool for sequencing service calls into a business process. In most cases it is evident at design time which services you need to invoke. But there are cases where you want to choose dynamically, at runtime, which process to call, for instance when the service to call depends on the content of a data model. You might have several services with roughly the same purpose that differ based on a preference. For example, different tasks may have to be invoked in different cases, with the mapping of case to task registered in a database, and the task definitions may later be extended with extra tasks.

You can do this in Oracle BPEL Process Manager using Dynamic Partnerlinks. On OTN you can find the BPEL Cookbook, which covers this subject. In the Cookbook case the writer starts from the Orderbooking Tutorial, where you can do a loan request to different loan providers. The idea is that you might want to add loan providers without changing your process. However, I found that article quite complicated, and at least pretty hard to translate to a particular situation.

But a Dynamic Partnerlink solution turns out to be quite easy to implement. In this article I'll tell you how.

Prerequisites

There is really one major prerequisite: every process that you want to call has to be based on exactly the same WSDL. It is not enough that the request and response documents are based on a prescribed XSD; the WSDL has to be literally the same, including the message types, namespaces, port types and bindings. The only thing that will differ after deployment is the endpoint URL.

People who have experience with Oracle Workflow calling custom PL/SQL workflow functions (standalone or the OWF embedded in E-Business Suite), rule functions in the OWF Business Event System, or PL/SQL call-back notification functions in Oracle Streams Advanced Queuing may understand the importance of this prerequisite. Java programmers can see this as implementing an interface.

Then there are three things to do:

Create a “template” process, or just the first process of a set that have to be called;

Create the calling process, with a partnerlink based on the template process and an initialize-and-invocation-sequence;

Create additional processes.

The template process

You can build the template process exactly like any other process. Keep the following things in mind:

Create request and response documents that fit the needs of every possible service that you want to be able to call. The request document needs to give each service enough information to find out what it has to do. With the response document each process needs to be able to pass back all the information that the calling process needs to act upon.

Create a separate XSD for the request and response documents and put it on an HTTP server (for instance the document root of the Oracle HTTP Server of the SOA Suite). It is not recommended to use the xmllib folder of the BPEL Process Manager for this, since that might cause complications when BPEL processes are activated at start-up.

Think of a smart generic name for the service. Since every other process/service needs to be based on the exact same WSDL, the message types, port types, etc. need names that describe a valid function within each of those processes.

Since each process will have the same WSDL, think twice about whether it has to be a synchronous or an asynchronous service.
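As an illustration of such a generic request and response pair, a shared schema could look like the sketch below. The namespace and all element names (TaskRequest, taskCode, payload, TaskResponse, status, result) are assumptions made up for this example; they are not taken from an actual project.

```xml
<?xml version="1.0" encoding="UTF-8"?>
<!-- Hypothetical shared schema: every callable process accepts TaskRequest
     and returns TaskResponse, whatever it does internally. -->
<xsd:schema xmlns:xsd="http://www.w3.org/2001/XMLSchema"
            xmlns="http://example.com/xsd/GenericTask"
            targetNamespace="http://example.com/xsd/GenericTask"
            elementFormDefault="qualified">
  <xsd:element name="TaskRequest">
    <xsd:complexType>
      <xsd:sequence>
        <!-- Tells the called service what it is expected to do -->
        <xsd:element name="taskCode" type="xsd:string"/>
        <!-- Free-form input, interpreted by each service in its own way -->
        <xsd:element name="payload" type="xsd:string" minOccurs="0"/>
      </xsd:sequence>
    </xsd:complexType>
  </xsd:element>
  <xsd:element name="TaskResponse">
    <xsd:complexType>
      <xsd:sequence>
        <!-- Outcome the calling process can act upon -->
        <xsd:element name="status" type="xsd:string"/>
        <xsd:element name="result" type="xsd:string" minOccurs="0"/>
      </xsd:sequence>
    </xsd:complexType>
  </xsd:element>
</xsd:schema>
```

The point of keeping the documents this generic is that a new service can be added later without touching the schema, and therefore without touching the shared WSDL.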

After creating the template process, deploy it to the development server. At deployment the WSDL is extended with the actual endpoint of the process.

Creating the Invocation Process

The invocation process is created as another BPEL process.

The first step is to create a partnerlink. This partnerlink should be based on the template process that is deployed to the development server.

Then add the following namespace to your bpel process:

xmlns:wsa="http://schemas.xmlsoap.org/ws/2003/03/addressing"

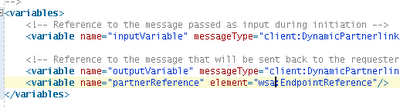

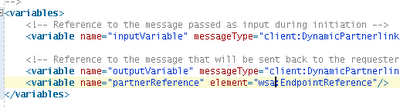

Based on this namespace, add an “EndpointReference” variable:

<variable name="partnerReference" element="wsa:EndpointReference"> </variable>

This variable needs to be initialized. Contrary to the BPEL Cookbook, you only need to initialize the “address” node. If you don't initialize it, or if you also initialize the service node, you might run into a Java null-pointer exception. At least I did.

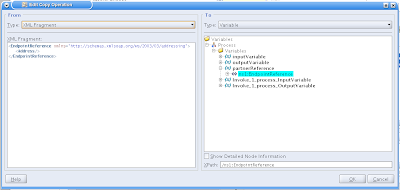

You initialize the endpoint-reference by copying the following xml-fragment to the endpoint reference:

<EndpointReference xmlns="http://schemas.xmlsoap.org/ws/2003/03/addressing">
  <Address/>
</EndpointReference>

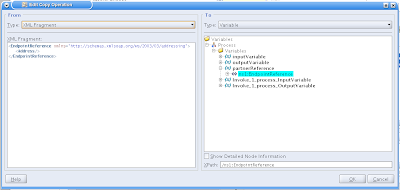

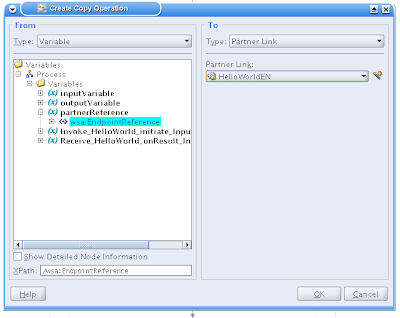

In JDeveloper this looks like:

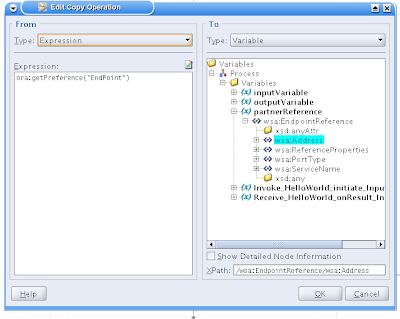

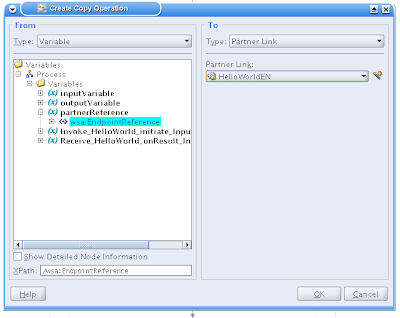

Then you copy the determined endpoint url into the address node of the EndpointReference variable:

<copy>
  <from expression='ora:getPreference("EndPoint")'/>
  <to variable="partnerReference"
      query="/wsa:EndpointReference/wsa:Address"/>
</copy>

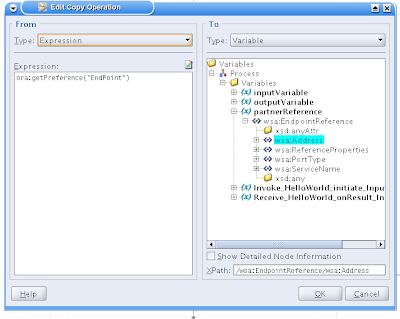

In JDeveloper this looks like:

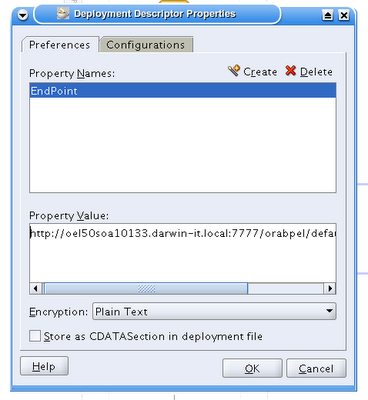

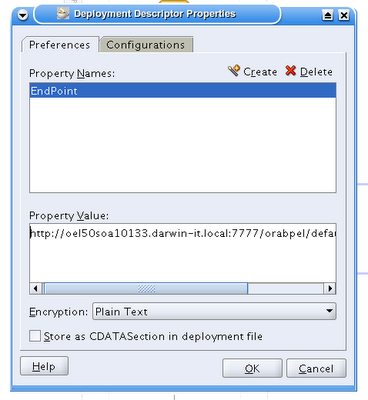

In this case I get the address from a preference in the deployment descriptor.

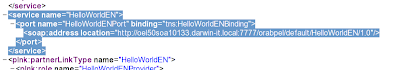

The actual address can be determined from the WSDL as it is deployed on the server. In my case:

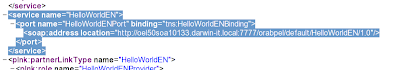

<service name="HelloWorldEN">
  <port name="HelloWorldENPort" binding="tns:HelloWorldENBinding">
    <soap:address location="http://oel50soa10133.darwin-it.local:7777/orabpel/default/HelloWorldEN/1.0"/>
  </port>
</service>

The content of the location attribute of the soap:address node is the URL that has to be copied into the endpoint-reference variable. In this example it is put in the EndPoint deployment preference.
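For reference, a deployment preference of this kind lives in the preferences section of bpel.xml in the 10.1.3 release, as far as I know. The sketch below assumes an invocation process named HelloWorldInvoker (a made-up name for this example) and leaves out the partnerlink bindings that JDeveloper generates.

```xml
<?xml version="1.0" encoding="UTF-8"?>
<!-- Sketch of bpel.xml for the invoking process.
     "HelloWorldInvoker" is a hypothetical process name. -->
<BPELSuitcase>
  <BPELProcess id="HelloWorldInvoker" src="HelloWorldInvoker.bpel">
    <!-- generated partnerLinkBindings section omitted here -->
    <preferences>
      <!-- Read in the process with ora:getPreference("EndPoint") -->
      <property name="EndPoint" encryption="plaintext">http://oel50soa10133.darwin-it.local:7777/orabpel/default/HelloWorldEN/1.0</property>
    </preferences>
  </BPELProcess>
</BPELSuitcase>
```

Keeping the URL in a preference means it can be changed per environment without editing the process logic itself.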

Having done that, you need the partnerlink to the template project and an invoke activity (and, for asynchronous processes, a receive activity). Then, before doing the invoke, you need to copy the endpoint reference to the partnerlink:

<copy>
  <from variable="partnerReference" query="/wsa:EndpointReference"/>
  <to partnerLink="HelloWorldEN"/>
</copy>
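Put together, the initialize-and-invoke sequence could be sketched as follows. This only combines the fragments shown so far; the prefix ns1, the activity names and the variables requestVariable and responseVariable are assumptions for this example, and the target query of the first copy is how I would expect it to look for an element-based variable, so check it against what JDeveloper generates for you.

```xml
<!-- Assumes these declarations on the process element:
       xmlns:wsa="http://schemas.xmlsoap.org/ws/2003/03/addressing"
       xmlns:ns1="http://xmlns.oracle.com/HelloWorldEN"
     and hypothetical variables requestVariable and responseVariable
     based on the message types of the template WSDL. -->
<sequence name="invokeDynamicPartner">
  <assign name="setPartnerReference">
    <!-- 1. Initialize the variable with an empty Address node only -->
    <copy>
      <from>
        <EndpointReference xmlns="http://schemas.xmlsoap.org/ws/2003/03/addressing">
          <Address/>
        </EndpointReference>
      </from>
      <to variable="partnerReference" query="/wsa:EndpointReference"/>
    </copy>
    <!-- 2. Fill the Address node with the endpoint URL -->
    <copy>
      <from expression='ora:getPreference("EndPoint")'/>
      <to variable="partnerReference"
          query="/wsa:EndpointReference/wsa:Address"/>
    </copy>
    <!-- 3. Point the partnerlink at that endpoint -->
    <copy>
      <from variable="partnerReference" query="/wsa:EndpointReference"/>
      <to partnerLink="HelloWorldEN"/>
    </copy>
  </assign>
  <invoke name="invokeHelloWorld" partnerLink="HelloWorldEN"
          portType="ns1:HelloWorldEN" operation="initiate"
          inputVariable="requestVariable"/>
  <!-- Only needed because the template process is asynchronous -->
  <receive name="receiveHelloWorld" partnerLink="HelloWorldEN"
           portType="ns1:HelloWorldENCallback" operation="onResult"
           variable="responseVariable" createInstance="no"/>
</sequence>
```

The order matters: the partnerlink has to be re-pointed before the invoke runs, otherwise the call goes to the endpoint that was fixed at design time.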

And that's about it. You can deploy this and check that it works by running the process, and then running the test again with a “wrecked” endpoint URL. If the URL is broken, the invoke should end in an error.
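A preference is only one possible source for the address. If the endpoint is determined from data, for instance looked up in a database or passed in with the request, the same copy can read it from a variable instead. A sketch, assuming a hypothetical request element InvokerProcessRequest with a child element endpointUrl in the client namespace of the invoking process:

```xml
<!-- Hypothetical: the element names InvokerProcessRequest and endpointUrl
     are made up; replace them with wherever your process holds the URL. -->
<copy>
  <from variable="inputVariable" part="payload"
        query="/client:InvokerProcessRequest/client:endpointUrl"/>
  <to variable="partnerReference"
      query="/wsa:EndpointReference/wsa:Address"/>
</copy>
```

The rest of the sequence stays the same; only the source of the URL changes.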

Create additional processes

The next thing to do is to create additional services, based on the same WSDL. For BPEL you'll have to create another BPEL Project. Then perform the following steps:

Copy WSDL

Take the WSDL of the template project and copy and paste it over the WSDL of your newly created BPEL project.

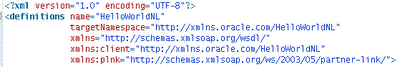

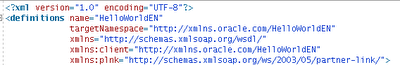

Note that before the change the definitions tag of your newly created BPEL project looks like:

<definitions name="HelloWorldNL"
             targetNamespace="http://xmlns.oracle.com/HelloWorldNL"
             xmlns="http://schemas.xmlsoap.org/wsdl/"
             xmlns:client="http://xmlns.oracle.com/HelloWorldNL"
             xmlns:plnk="http://schemas.xmlsoap.org/ws/2003/05/partner-link/">

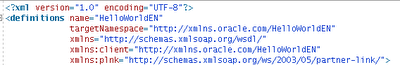

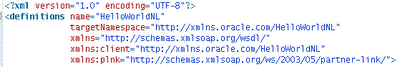

After pasting the content of the template-WSDL over it, it will look like:

<definitions name="HelloWorldEN"
             targetNamespace="http://xmlns.oracle.com/HelloWorldEN"
             xmlns="http://schemas.xmlsoap.org/wsdl/"
             xmlns:client="http://xmlns.oracle.com/HelloWorldEN"
             xmlns:plnk="http://schemas.xmlsoap.org/ws/2003/05/partner-link/">

Change the Namespaces of your BPEL process

Open the BPEL process source (in JDeveloper click on the Source tab). The namespaces at the top are:

<process name="HelloWorldNL"
         targetNamespace="http://xmlns.oracle.com/HelloWorldNL"
         xmlns="http://schemas.xmlsoap.org/ws/2003/03/business-process/"
         xmlns:client="http://xmlns.oracle.com/HelloWorldNL"
         xmlns:ora="http://schemas.oracle.com/xpath/extension"
         xmlns:orcl="http://www.oracle.com/XSL/Transform/java/oracle.tip.pc.services.functions.ExtFunc"
         xmlns:xp20="http://www.oracle.com/XSL/Transform/java/oracle.tip.pc.services.functions.Xpath20"
         xmlns:ldap="http://schemas.oracle.com/xpath/extension/ldap"
         xmlns:bpelx="http://schemas.oracle.com/bpel/extension"
         xmlns:bpws="http://schemas.xmlsoap.org/ws/2003/03/business-process/">

Change the client and target namespaces to the namespaces matching the WSDL:

<process name="HelloWorldNL"
         targetNamespace="http://xmlns.oracle.com/HelloWorldEN"
         xmlns="http://schemas.xmlsoap.org/ws/2003/03/business-process/"
         xmlns:client="http://xmlns.oracle.com/HelloWorldEN"
         xmlns:ora="http://schemas.oracle.com/xpath/extension"
         xmlns:orcl="http://www.oracle.com/XSL/Transform/java/oracle.tip.pc.services.functions.ExtFunc"
         xmlns:xp20="http://www.oracle.com/XSL/Transform/java/oracle.tip.pc.services.functions.Xpath20"
         xmlns:ldap="http://schemas.oracle.com/xpath/extension/ldap"
         xmlns:bpelx="http://schemas.oracle.com/bpel/extension"
         xmlns:bpws="http://schemas.xmlsoap.org/ws/2003/03/business-process/">

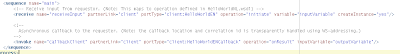

Changing the port types

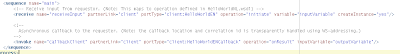

The port types have to be changed as well. They are referenced in the receive and the invoke steps.

<!-- Receive input from requestor. (Note: This maps to operation defined in HelloWorldNL.wsdl) -->
<receive name="receiveInput" partnerLink="client" portType="client:HelloWorldNL"
         operation="initiate" variable="inputVariable" createInstance="yes"/>
<!-- Asynchronous callback to the requester. (Note: the callback location and correlation id is transparently handled using WS-addressing.) -->
<invoke name="callbackClient" partnerLink="client" portType="client:HelloWorldNLCallback"
        operation="onResult" inputVariable="outputVariable"/>

Change the portType-attributes according to the portType name-attributes in the WSDL:

<sequence name="main">
  <!-- Receive input from requestor. (Note: This maps to operation defined in HelloWorldNL.wsdl) -->
  <receive name="receiveInput" partnerLink="client" portType="client:HelloWorldEN"
           operation="initiate" variable="inputVariable" createInstance="yes"/>
  <!-- Asynchronous callback to the requester. (Note: the callback location and correlation id is transparently handled using WS-addressing.) -->
  <invoke name="callbackClient" partnerLink="client" portType="client:HelloWorldENCallback"
          operation="onResult" inputVariable="outputVariable"/>
</sequence>
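For comparison, the portType elements in the copied WSDL that these attributes refer to would look roughly like this. The port type, operation and message names are the ones used elsewhere in this article; the exact layout of your WSDL may differ.

```xml
<!-- Fragment of the template WSDL, assuming xmlns:client is bound to
     http://xmlns.oracle.com/HelloWorldEN on the definitions element. -->
<definitions xmlns="http://schemas.xmlsoap.org/wsdl/">
  <!-- Port type implemented by the process (referenced by the receive) -->
  <portType name="HelloWorldEN">
    <operation name="initiate">
      <input message="client:HelloWorldENRequestMessage"/>
    </operation>
  </portType>
  <!-- Port type implemented by the caller (referenced by the callback invoke) -->
  <portType name="HelloWorldENCallback">
    <operation name="onResult">
      <input message="client:HelloWorldENResponseMessage"/>
    </operation>
  </portType>
</definitions>
```

The portType attribute in the BPEL source is the client prefix plus the name attribute of the matching portType element.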

Partnerlink Type and Roles

At the bottom of the WSDL you'll find a partnerLinkType element. It has two roles defined, one for each portType. In the BPEL process source you'll have a client partnerlink:

<partnerLink name="client" partnerLinkType="client:HelloWorldNL" myRole="HelloWorldNLProvider" partnerRole="HelloWorldNLRequester"/>

Here you'll also see the corresponding type and roles. Change them according to the WSDL:

<partnerLink name="client" partnerLinkType="client:HelloWorldEN" myRole="HelloWorldENProvider" partnerRole="HelloWorldENRequester"/>
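The partnerLinkType element in the WSDL that this partnerlink has to match would look roughly like the sketch below. The role and port type names follow the partnerlink and the receive/invoke steps shown in this article; treat the exact layout as an assumption and compare it with your own WSDL.

```xml
<!-- Fragment from the bottom of the template WSDL, assuming xmlns:client
     is bound to http://xmlns.oracle.com/HelloWorldEN. -->
<plnk:partnerLinkType name="HelloWorldEN"
    xmlns:plnk="http://schemas.xmlsoap.org/ws/2003/05/partner-link/">
  <!-- Role played by the process itself (myRole in the client partnerlink) -->
  <plnk:role name="HelloWorldENProvider">
    <plnk:portType name="client:HelloWorldEN"/>
  </plnk:role>
  <!-- Role played by the caller (partnerRole in the client partnerlink) -->
  <plnk:role name="HelloWorldENRequester">
    <plnk:portType name="client:HelloWorldENCallback"/>
  </plnk:role>
</plnk:partnerLinkType>
```

In the invoking process the roles are the other way around: there the partnerlink to the template process has the requester role as myRole and the provider role as partnerRole.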

Input and Output Variables

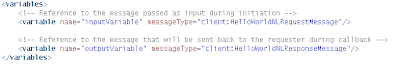

The input and output variables of the bpel process are based on the message types in the WSDL.

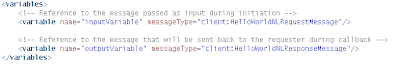

<variables>
  <!-- Reference to the message passed as input during initiation -->
  <variable name="inputVariable" messageType="client:HelloWorldNLRequestMessage"/>
  <!-- Reference to the message that will be sent back to the requester during callback -->
  <variable name="outputVariable" messageType="client:HelloWorldNLResponseMessage"/>
</variables>

Of course these should be changed according to the WSDL too:

<variables>
  <!-- Reference to the message passed as input during initiation -->
  <variable name="inputVariable" messageType="client:HelloWorldENRequestMessage"/>
  <!-- Reference to the message that will be sent back to the requester during callback -->
  <variable name="outputVariable" messageType="client:HelloWorldENResponseMessage"/>
</variables>

If the message types in the WSDL are based on local XSDs (within the project, not on an HTTP server), then you have to copy the XSDs to your new project too. But especially in this case I would recommend putting the XSDs on an HTTP server. That way a change in the XSD immediately applies to all the processes that are based on it.
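With the XSD on an HTTP server, the types section of the shared WSDL can import it by URL instead of by a project-relative file name. A sketch, in which the /xsd/HelloWorldEN.xsd path is a made-up location under the document root and the schema namespace is assumed to be the same as that of the WSDL:

```xml
<!-- Fragment of the template WSDL; the schemaLocation path is hypothetical. -->
<types xmlns="http://schemas.xmlsoap.org/wsdl/">
  <schema xmlns="http://www.w3.org/2001/XMLSchema">
    <import namespace="http://xmlns.oracle.com/HelloWorldEN"
            schemaLocation="http://oel50soa10133.darwin-it.local:7777/xsd/HelloWorldEN.xsd"/>
  </schema>
</types>
```

Because every copy of the WSDL then points to the same URL, there is nothing XSD-related left to copy when you create another process.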

Finish/wrap-up

This should be about it. Now you can implement your new BPEL process. Then deploy it and test your dynamic invocation by copying the endpoint of the new BPEL process and registering it in your invocation process.